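Prepare: loading the decoder and initializing the data buffers

Once the playback resource has been set with setDataSource, we can call prepare to get ready for playback. Prepare is the most complex stage of the whole flow. Broadly it has two parts: the first loads the decoder, and the second sets up the data buffers. As with setDataSource, the calls leading up to prepare go from the Java layer to the JNI layer and then to the native layer, so we will not dwell on them. The code for that part is as follows.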

public void prepare () throws IOException, IllegalStateException { _prepare (); scanInternalSubtitleTracks (); }

private native void _prepare() throws IOException, IllegalStateException;

static void android_media_MediaPlayer_prepare(JNIEnv * env , jobject thiz ) { sp<MediaPlayer> mp = getMediaPlayer(env , thiz ) ; if (mp == NULL ) { jniThrowException(env , "java/lang/IllegalStateException" , NULL) ; return; } sp<IGraphicBufferProducer> st = getVideoSurfaceTexture(env , thiz ) ; mp->setVideoSurfaceTexture(st ) ; process_media_player_call( env , thiz , mp ->prepare () , "java/io/IOException" , "Prepare failed." ); }

status_t MediaPlayer::prepare() { Mutex::Autolock _l(mLock); mLockThreadId = getThreadId(); if (mPrepareSync) { mLockThreadId = 0; return -EALREADY; } mPrepareSync = true; status_t ret = prepareAsync_l(); if (ret != NO_ERROR) { mLockThreadId = 0; return ret; } if (mPrepareSync) { mSignal.wait(mLock); mPrepareSync = false; } mLockThreadId = 0; return mPrepareStatus; }

We start from prepareAsync_l:

status_t MediaPlayer::prepareAsync_l(){ if ( (mPlayer != 0 ) && ( mCurrentState & (MEDIA_PLAYER_INITIALIZED | MEDIA_PLAYER_STOPPED ) ) ) { if (mAudioAttributesParcel != NULL) { mPlayer->setParameter(KEY_PARAMETER_AUDIO_ATTRIBUTES, *mAudioAttributesParcel); } else { mPlayer->setAudioStreamType(mStreamType); } mCurrentState = MEDIA_PLAYER_PREPARING; return mPlayer->prepareAsync(); } return INVALID_OPERATION; }

StagefrightPlayer simply forwards the call to AwesomePlayer's prepareAsync:

status_t StagefrightPlayer::prepareAsync() { return mPlayer->prepareAsync(); }

status_t AwesomePlayer::prepareAsync() { ATRACE_CALL(); Mutex::Autolock autoLock(mLock); if (mFlags & PREPARING) { return UNKNOWN_ERROR; } mIsAsyncPrepare = true; return prepareAsync_l(); }

AwesomePlayer::prepareAsync_l starts the event queue if it is not already running, creates an AwesomeEvent bound to onPrepareAsyncEvent, and posts that event (mAsyncPrepareEvent) to the queue.

status_t AwesomePlayer::prepareAsync_l() { if (mFlags & PREPARING) { return UNKNOWN_ERROR; } if (!mQueueStarted) { mQueue.start() ; mQueueStarted = true ; } modifyFlags(PREPARING, SET) ; mAsyncPrepareEvent = new AwesomeEvent(this , &AwesomePlayer::onPrepareAsyncEvent ) ; mQueue.postEvent(mAsyncPrepareEvent ) ; return OK; }

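Before continuing with the prepare flow, let's look at the TimedEventQueue class. As the name suggests, it is an event queue. Its constructor is simple: it initializes the member variables and creates a PMDeathRecipient.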

TimedEventQueue::TimedEventQueue() : mNextEventID(1) , mRunning(false ) , mStopped(false ) , mDeathRecipient(new PMDeathRecipient(this ) ), mWakeLockCount(0) { }

The start method creates a joinable thread whose entry point is ThreadWrapper.

void TimedEventQueue::start() { if (mRunning) { return; } mStopped = false ; pthread_attr_t attr; pthread_attr_init(&attr ) ; pthread_attr_setdetachstate(&attr , PTHREAD_CREATE_JOINABLE) ; pthread_create(&mThread , &attr , ThreadWrapper, this ) ; pthread_attr_destroy(&attr ) ; mRunning = true ; }

void *TimedEventQueue::ThreadWrapper(void *me) { androidSetThreadPriority (0 , ANDROID_PRIORITY_FOREGROUND); static_cast<TimedEventQueue *>(me)->threadEntry (); return NULL; }

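The thread started through ThreadWrapper runs threadEntry, which loops and repeatedly examines the event at the head of the queue to see whether its execution time has arrived. If the queue is empty, the thread waits. If the head event is not yet due, the thread waits for the remaining delay, but never for more than 10 seconds at a time: when a capped wait times out, the loop simply re-checks the queue head. Once the event is due, it is removed from the queue and its fire method is called. The thread exits only when mStopped is set.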

void TimedEventQueue::threadEntry() { prctl(PR_SET_NAME, (unsigned long)"TimedEventQueue", 0, 0, 0); for (;;) { int64_t now_us = 0; sp<Event> event; bool wakeLocked = false; { Mutex::Autolock autoLock(mLock); if (mStopped) { break; } while (mQueue.empty()) { mQueueNotEmptyCondition.wait(mLock); } event_id eventID = 0; for (;;) { if (mQueue.empty()) { break; } List<QueueItem>::iterator it = mQueue.begin(); eventID = (*it).event->eventID(); now_us = ALooper::GetNowUs(); int64_t when_us = (*it).realtime_us; int64_t delay_us; if (when_us < 0 || when_us == INT64_MAX) { delay_us = 0; } else { delay_us = when_us - now_us; } if (delay_us <= 0) { break; } static int64_t kMaxTimeoutUs = 10000000ll; bool timeoutCapped = false; if (delay_us > kMaxTimeoutUs) { delay_us = kMaxTimeoutUs; timeoutCapped = true; } status_t err = mQueueHeadChangedCondition.waitRelative(mLock, delay_us * 1000ll); if (!timeoutCapped && err == -ETIMEDOUT) { now_us = ALooper::GetNowUs(); break; } } event = removeEventFromQueue_l(eventID, &wakeLocked); } if (event != NULL) { event->fire(this, now_us); if (wakeLocked) { Mutex::Autolock autoLock(mLock); releaseWakeLock_l(); } } } }
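To make the delay handling in threadEntry easier to follow, here is a minimal sketch of just that computation as a pure function. It is not AOSP code: WaitPlan and planWait are hypothetical names introduced for illustration, and the only values taken from the listing are the 10-second cap and the treatment of negative or INT64_MAX timestamps.

```cpp
#include <cstdint>

// Hypothetical helper (not part of AOSP): mirrors how threadEntry decides
// how long to sleep before the head event is due.
struct WaitPlan {
    int64_t delayUs;  // how long to wait; <= 0 means "fire now"
    bool capped;      // true if the wait was shortened to the maximum
};

constexpr int64_t kMaxTimeoutUs = 10000000LL;  // 10 seconds, as in the listing

inline WaitPlan planWait(int64_t whenUs, int64_t nowUs) {
    // Negative or INT64_MAX timestamps are treated as due immediately.
    if (whenUs < 0 || whenUs == INT64_MAX) {
        return {0, false};
    }
    int64_t delayUs = whenUs - nowUs;
    if (delayUs > kMaxTimeoutUs) {
        // Long waits are split into chunks; the caller loops and
        // re-evaluates the queue head after each chunk.
        return {kMaxTimeoutUs, true};
    }
    return {delayUs, false};
}
```

A capped result tells the caller that a timeout does not mean the event is due yet, which is why the listing only breaks out of the inner loop when the wait was not capped.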

The fire method simply invokes mMethod on the AwesomeEvent's mPlayer. This mMethod is the onPrepareAsyncEvent that we passed in when constructing the AwesomeEvent.

struct AwesomeEvent : public TimedEventQueue::Event {AwesomeEvent(AwesomePlayer * player ,void (AwesomePlayer::* method ) () ) : mPlayer(player ) , mMethod(method ) { } protected: virtual ~AwesomeEvent() {} virtual void fire(TimedEventQueue * , int64_t ) { (mPlayer->*mMethod)() ; } private : AwesomePlayer *mPlayer; void (AwesomePlayer::*mMethod)() ; AwesomeEvent(const AwesomeEvent &) ; AwesomeEvent &operator=(const AwesomeEvent &); };
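The pointer-to-member-function idiom used by AwesomeEvent can be tried in isolation. The sketch below uses hypothetical stand-ins (Player, PlayerEvent, onPrepare) rather than the real AwesomePlayer classes, and leaves out the reference counting and the event queue.

```cpp
#include <cstdint>

// Hypothetical stand-in for AwesomePlayer.
class Player {
public:
    void onPrepare() { ++prepareCalls; }
    int prepareCalls = 0;
};

// Hypothetical stand-in for AwesomeEvent.
class PlayerEvent {
public:
    PlayerEvent(Player *player, void (Player::*method)())
        : mPlayer(player), mMethod(method) {}

    // Same shape as AwesomeEvent::fire: the argument is ignored and the
    // stored member function is invoked on the stored object.
    void fire(int64_t /* nowUs */) { (mPlayer->*mMethod)(); }

private:
    Player *mPlayer;
    void (Player::*mMethod)();
};
```

Constructing PlayerEvent ev(&player, &Player::onPrepare) and calling ev.fire(0) runs player.onPrepare(), which is the same path by which the queue thread ends up in onPrepareAsyncEvent.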

So we need to look at AwesomePlayer's onPrepareAsyncEvent method. It takes the lock and hands off to beginPrepareAsync_l:

void AwesomePlayer::onPrepareAsyncEvent() { Mutex::Autolock autoLock(mLock); beginPrepareAsync_l(); }

void AwesomePlayer::beginPrepareAsync_l() { if (mFlags & PREPARE_CANCELLED) { ALOGI("prepare was cancelled before doing anything"); abortPrepare(UNKNOWN_ERROR); return; } if (mUri.size() > 0) { status_t err = finishSetDataSource_l(); if (err != OK) { abortPrepare(err); return; } } if (mVideoTrack != NULL && mVideoSource == NULL) { status_t err = initVideoDecoder(); if (err != OK) { abortPrepare(err); return; } } if (mAudioTrack != NULL && mAudioSource == NULL) { status_t err = initAudioDecoder(); if (err != OK) { abortPrepare(err); return; } } modifyFlags(PREPARING_CONNECTED, SET); if (isStreamingHTTP()) { postBufferingEvent_l(); } else { finishAsyncPrepare_l(); } }

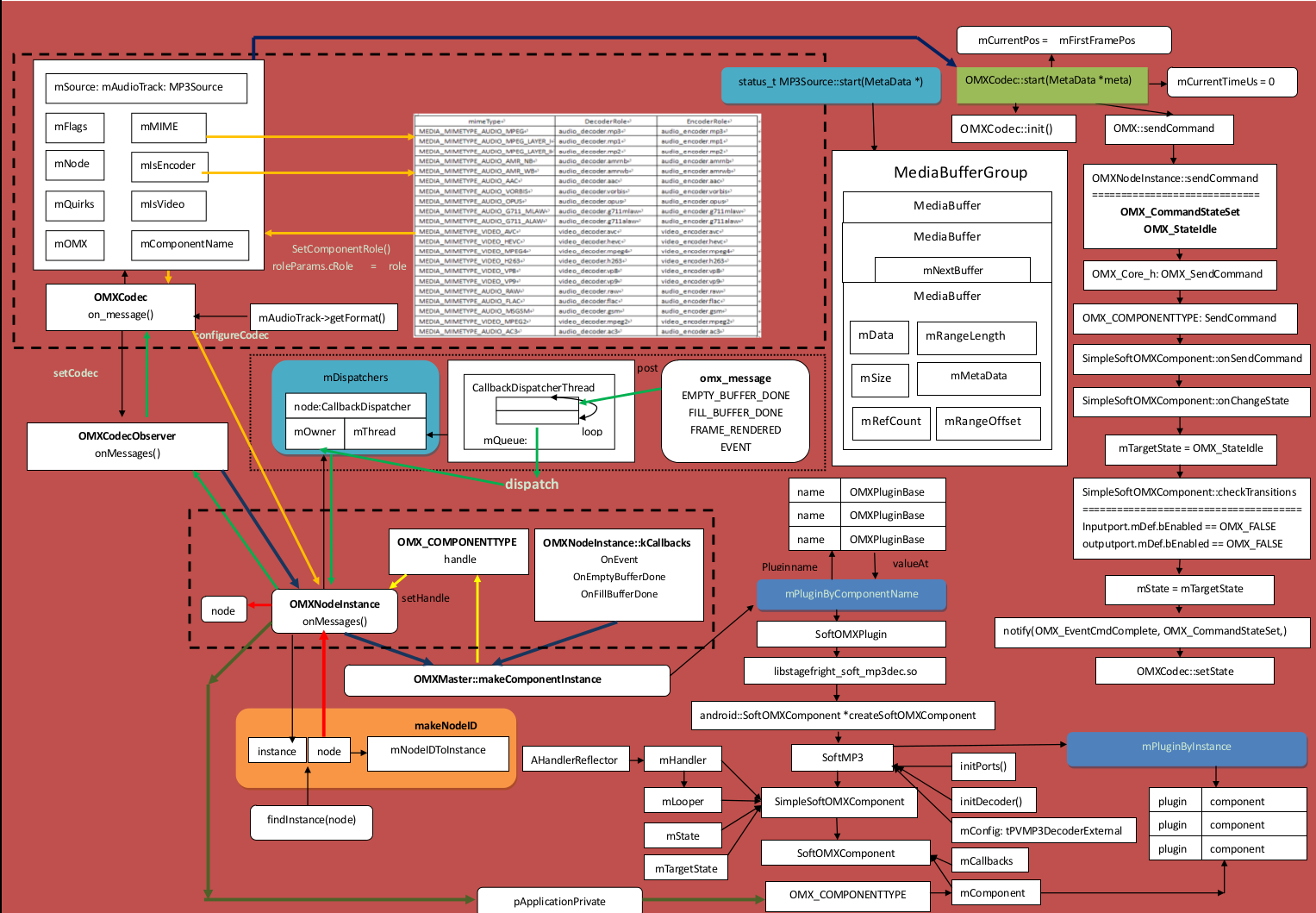

The whole process is shown in the figure below:

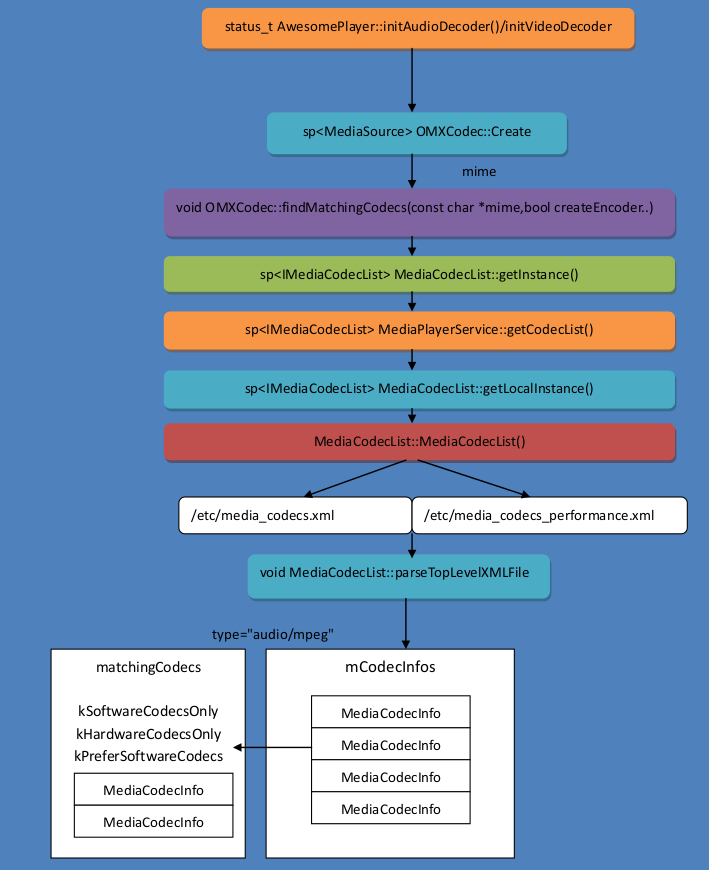

Next we focus on how the decoder is created. It starts with a call to OMXCodec::Create.

status_t AwesomePlayer::initAudioDecoder() { ATRACE_CALL(); sp<MetaData> meta = mAudioTrack->getFormat(); const char *mime; CHECK(meta->findCString(kKeyMIMEType, &mime)); audio_stream_type_t streamType = AUDIO_STREAM_MUSIC; if (mAudioSink != NULL) { streamType = mAudioSink->getAudioStreamType(); } mOffloadAudio = canOffloadStream(meta, (mVideoSource != NULL), isStreamingHTTP(), streamType); if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_RAW)) { mAudioSource = mAudioTrack; } else { mOmxSource = OMXCodec::Create( mClient.interface (), mAudioTrack->getFormat(), false , mAudioTrack); if (mOffloadAudio) { mAudioSource = mAudioTrack; } else { mAudioSource = mOmxSource; } } if (mAudioSource != NULL) { int64_t durationUs; if (mAudioTrack->getFormat()->findInt64(kKeyDuration, &durationUs)) { Mutex::Autolock autoLock(mMiscStateLock); if (mDurationUs < 0 || durationUs > mDurationUs) { mDurationUs = durationUs; } } status_t err = mAudioSource->start(); } else if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_QCELP)) { return OK; } if (mAudioSource != NULL) { Mutex::Autolock autoLock(mStatsLock); TrackStat *stat = &mStats.mTracks.editItemAt(mStats.mAudioTrackIndex); const char *component; if (!mAudioSource->getFormat() ->findCString(kKeyDecoderComponent, &component)) { component = "none" ; } stat->mDecoderName = component; } return mAudioSource != NULL ? OK : UNKNOWN_ERROR; }

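Before creating the decoder we need the MIME type of the file being played, which is then used to look up the matching decoders. An OMXCodecObserver is created and passed to allocateNode for each candidate. When a node is allocated successfully, an OMXCodec is built around it and registered with the observer, and the first codec that configures successfully is returned.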

sp<MediaSource> OMXCodec::Create(const sp<IOMX> &omx, const sp<MetaData> &meta, bool createEncoder, const sp<MediaSource> &source, const char *matchComponentName, uint32_t flags, const sp<ANativeWindow> &nativeWindow) { const char *mime; bool success = meta->findCString(kKeyMIMEType, &mime); Vector<CodecNameAndQuirks> matchingCodecs; findMatchingCodecs(mime, createEncoder, matchComponentName, flags, &matchingCodecs); sp<OMXCodecObserver> observer = new OMXCodecObserver; IOMX::node_id node = 0; for (size_t i = 0; i < matchingCodecs.size(); ++i) { const char *componentNameBase = matchingCodecs[i].mName.string(); uint32_t quirks = matchingCodecs[i].mQuirks; const char *componentName = componentNameBase; status_t err = omx->allocateNode(componentName, observer, &node); if (err == OK) { sp<OMXCodec> codec = new OMXCodec(omx, node, quirks, flags, createEncoder, mime, componentName, source, nativeWindow); observer->setCodec(codec); err = codec->configureCodec(meta); if (err == OK) { return codec; } } } return NULL; }

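Decoder matching is done by findMatchingCodecs. It first obtains the list of codecs available on the device, which is built mainly by parsing /etc/media_codecs.xml. It then calls findCodecByType to find the codecs that can handle the MIME type of the current file and adds them to matchingCodecs, so the result contains only codecs that support the current file type.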

void OMXCodec::findMatchingCodecs( const char *mime, bool createEncoder, const char *matchComponentName, uint32_t flags, Vector<CodecNameAndQuirks> *matchingCodecs) { matchingCodecs->clear(); const sp<IMediaCodecList> list = MediaCodecList::getInstance(); size_t index = 0 ; for (;;) { ssize_t matchIndex = list->findCodecByType(mime, createEncoder, index); if (matchIndex < 0 ) { break ; } index = matchIndex + 1 ; const sp<MediaCodecInfo> info = list->getCodecInfo(matchIndex); const char *componentName = info->getCodecName(); if (matchComponentName && strcmp(componentName, matchComponentName)) { continue ; } if (((flags & kSoftwareCodecsOnly) && IsSoftwareCodec(componentName)) || ((flags & kHardwareCodecsOnly) && !IsSoftwareCodec(componentName)) || (!(flags & (kSoftwareCodecsOnly | kHardwareCodecsOnly)))) { ssize_t index = matchingCodecs->add(); CodecNameAndQuirks *entry = &matchingCodecs->editItemAt(index); entry->mName = String8(componentName); entry->mQuirks = getComponentQuirks(info); ALOGV("matching '%s' quirks 0x%08x" , entry->mName.string (), entry->mQuirks); } } if (flags & kPreferSoftwareCodecs) { matchingCodecs->sort(CompareSoftwareCodecsFirst); } }
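The flag test inside findMatchingCodecs is dense, so here is a small sketch of the same filtering rule on plain strings. The flag values, selectCodecs, and the prefix test in isSoftware are assumptions made for this example, and std::stable_partition is swapped in for the listing's sort with CompareSoftwareCodecsFirst.

```cpp
#include <algorithm>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical flag values, for illustration only.
enum : uint32_t { kPreferSoftware = 1, kSoftwareOnly = 2, kHardwareOnly = 4 };

// Assumption: software components are recognised by the "OMX.google." prefix.
inline bool isSoftware(const std::string &name) {
    return name.compare(0, 11, "OMX.google.") == 0;
}

inline std::vector<std::string> selectCodecs(
        const std::vector<std::string> &candidates, uint32_t flags) {
    std::vector<std::string> out;
    for (const std::string &name : candidates) {
        bool sw = isSoftware(name);
        // Keep the codec if it satisfies the "only" flag that is set,
        // or if neither "only" flag is set at all.
        bool keep = ((flags & kSoftwareOnly) && sw)
                 || ((flags & kHardwareOnly) && !sw)
                 || !(flags & (kSoftwareOnly | kHardwareOnly));
        if (keep) out.push_back(name);
    }
    if (flags & kPreferSoftware) {
        // Move software codecs to the front, keeping relative order.
        std::stable_partition(out.begin(), out.end(), isSoftware);
    }
    return out;
}
```

With no flags set every candidate is kept in its original order, which corresponds to the third clause of the condition in the listing.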

sp<IMediaCodecList> MediaCodecList::getInstance() { Mutex::Autolock _l(sRemoteInitMutex); if (sRemoteList == NULL) { sp<IBinder> binder = defaultServiceManager()->getService(String16("media.player")); sp<IMediaPlayerService> service = interface_cast<IMediaPlayerService>(binder); if (service.get() != NULL) { sRemoteList = service->getCodecList(); } if (sRemoteList == NULL) { sRemoteList = getLocalInstance(); } } return sRemoteList; }

sp<IMediaCodecList> MediaPlayerService::getCodecList () const { return MediaCodecList::getLocalInstance (); }

sp<IMediaCodecList> MediaCodecList::getLocalInstance () { Mutex::Autolock autoLock (sInitMutex) ; if (sCodecList == NULL ) { MediaCodecList *codecList = new MediaCodecList; if (codecList->initCheck () == OK) { sCodecList = codecList; } else { delete codecList; } } return sCodecList; }

MediaCodecList::MediaCodecList() : mInitCheck(NO_INIT), mUpdate(false), mGlobalSettings(new AMessage()) { parseTopLevelXMLFile("/etc/media_codecs.xml"); parseTopLevelXMLFile("/etc/media_codecs_performance.xml", true /* ignore_errors */); parseTopLevelXMLFile(kProfilingResults, true /* ignore_errors */); }

ssize_t MediaCodecList::findCodecByType(const char *type, bool encoder, size_t startIndex) const { static const char *advancedFeatures[] = { "feature-secure-playback", "feature-tunneled-playback", }; size_t numCodecs = mCodecInfos.size(); for (; startIndex < numCodecs; ++startIndex) { const MediaCodecInfo &info = *mCodecInfos.itemAt(startIndex).get(); if (info.isEncoder() != encoder) { continue; } sp<MediaCodecInfo::Capabilities> capabilities = info.getCapabilitiesFor(type); if (capabilities == NULL) { continue; } const sp<AMessage> &details = capabilities->getDetails(); int32_t required; bool isAdvanced = false; for (size_t ix = 0; ix < ARRAY_SIZE(advancedFeatures); ix++) { if (details->findInt32(advancedFeatures[ix], &required) && required != 0) { isAdvanced = true; break; } } if (!isAdvanced) { return startIndex; } } return -ENOENT; }
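findMatchingCodecs and findCodecByType cooperate through the startIndex parameter: each search resumes just past the previous hit. The following sketch shows that pattern with a simplified, hypothetical CodecInfo record. It returns -1 instead of -ENOENT and does not model the advanced-feature check.

```cpp
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical record, much simpler than MediaCodecInfo.
struct CodecInfo {
    std::string name;
    std::string mime;
    bool encoder;
};

// Returns the index of the first match at or after startIndex, or -1.
inline long findByType(const std::vector<CodecInfo> &codecs,
                       const std::string &mime, bool encoder,
                       size_t startIndex) {
    for (size_t i = startIndex; i < codecs.size(); ++i) {
        if (codecs[i].encoder == encoder && codecs[i].mime == mime) {
            return static_cast<long>(i);
        }
    }
    return -1;
}

// Collects every match by resuming the search one past the previous hit.
inline std::vector<std::string> allMatches(
        const std::vector<CodecInfo> &codecs,
        const std::string &mime, bool encoder) {
    std::vector<std::string> names;
    size_t index = 0;
    for (;;) {
        long match = findByType(codecs, mime, encoder, index);
        if (match < 0) break;
        index = static_cast<size_t>(match) + 1;
        names.push_back(codecs[static_cast<size_t>(match)].name);
    }
    return names;
}
```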

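The steps above only filter out the information about decoders that can handle the current file type. None of those decoders has been instantiated yet. Instantiation is done by the following snippet from OMXCodec::Create:

status_t err = omx->allocateNode(componentName, observer, &node); sp<OMXCodec> codec = new OMXCodec(omx, node, quirks, flags, createEncoder, mime, componentName, source, nativeWindow); observer->setCodec(codec); err = codec->configureCodec(meta);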

allocateNode first creates an OMXNodeInstance object and then calls makeComponentInstance on mMaster to create the component:

status_t OMX::allocateNode( const char *name, const sp<IOMXObserver> &observer, node_id *node ) { Mutex ::Autolock autoLock(mLock); *node = 0 ; OMXNodeInstance *instance = new OMXNodeInstance(this, observer, name); OMX_COMPONENTTYPE *handle; OMX_ERRORTYPE err = mMaster->makeComponentInstance(name, &OMXNodeInstance::kCallbacks,instance, &handle); *node = makeNodeID (instance); mDispatchers.add(*node , new CallbackDispatcher(instance)); instance->setHandle(*node , handle ); mLiveNodes.add(IInterface::asBinder(observer), instance); IInterface::asBinder(observer)->linkToDeath(this); return OK; }

The OMXNodeInstance constructor is straightforward, so we will not go through it in detail.

OMXNodeInstance::OMXNodeInstance( OMX *owner , const sp<IOMXObserver> &observer, const char *name ) : mOwner(owner ), mNodeID(0 ), mHandle(NULL ), mObserver(observer), mDying(false ), mBufferIDCount(0 ) { mName = ADebug::GetDebugName(name ); DEBUG = ADebug::GetDebugLevelFromProperty(name , "debug.stagefright.omx-debug"); ALOGV("debug level for %s is %d", name , DEBUG ); DEBUG_BUMP = DEBUG ; mNumPortBuffers[0 ] = 0 ; mNumPortBuffers[1 ] = 0 ; mDebugLevelBumpPendingBuffers[0 ] = 0 ; mDebugLevelBumpPendingBuffers[1 ] = 0 ; mMetadataType[0 ] = kMetadataBufferTypeInvalid; mMetadataType[1 ] = kMetadataBufferTypeInvalid; }

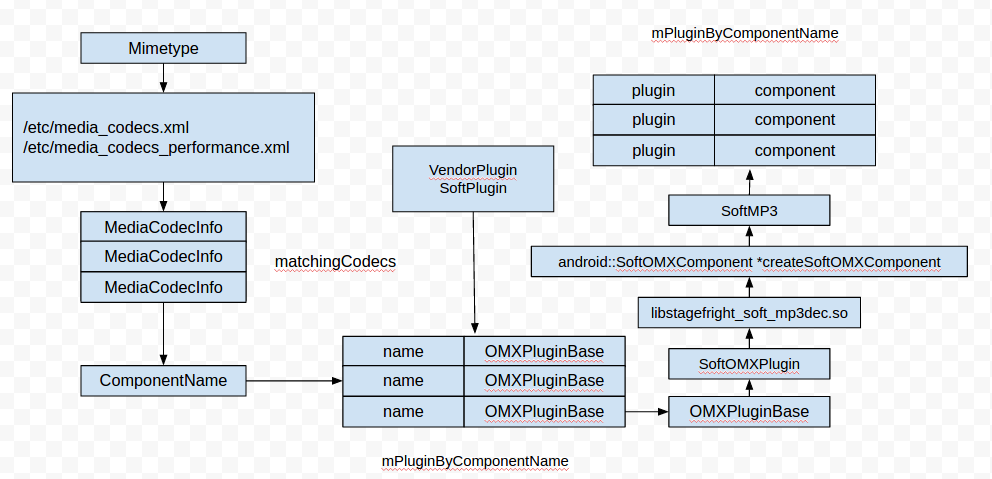

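makeComponentInstance first calls mPluginByComponentName.indexOfKey(String8(name)) to find the index of the component with the given name, then calls mPluginByComponentName.valueAt(index) to get the plugin that provides it, and finally asks that plugin to create the component instance. mPluginByComponentName is built when the AwesomePlayer is created, and it holds the supported vendor plugin and software plugin.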

OMX_ERRORTYPE OMXMaster::makeComponentInstance( const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { Mutex::Autolock autoLock(mLock); ssize_t index = mPluginByComponentName.indexOfKey(String8(name)); OMXPluginBase *plugin = mPluginByComponentName.valueAt(index); OMX_ERRORTYPE err = plugin ->makeComponentInstance(name, callbacks, appData, component); if (err != OMX_ErrorNone) { return err ; } mPluginByInstance.add(*component, plugin ); return err ; }

We take the software decoder as an example to walk through makeComponentInstance:

OMX_ERRORTYPE SoftOMXPlugin::makeComponentInstance(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { for (size_t i = 0; i < kNumComponents; ++i) { if (strcmp(name, kComponents[i].mName)) { continue; } AString libName = "libstagefright_soft_"; libName.append(kComponents[i].mLibNameSuffix); libName.append(".so"); void *libHandle = dlopen(libName.c_str(), RTLD_NOW); typedef SoftOMXComponent *(*CreateSoftOMXComponentFunc)(const char *, const OMX_CALLBACKTYPE *, OMX_PTR, OMX_COMPONENTTYPE **); CreateSoftOMXComponentFunc createSoftOMXComponent = (CreateSoftOMXComponentFunc)dlsym(libHandle, "_Z22createSoftOMXComponentPKcPK16OMX_CALLBACKTYPE" "PvPP17OMX_COMPONENTTYPE"); sp<SoftOMXComponent> codec = (*createSoftOMXComponent)(name, callbacks, appData, component); OMX_ERRORTYPE err = codec->initCheck(); codec->incStrong(this); codec->setLibHandle(libHandle); return OMX_ErrorNone; } return OMX_ErrorInvalidComponentName; }

static const struct { const char *mName; const char *mLibNameSuffix; const char *mRole; } kComponents[] = { { "OMX.google.aac.decoder" , "aacdec" , "audio_decoder.aac" }, { "OMX.google.aac.encoder" , "aacenc" , "audio_encoder.aac" }, { "OMX.google.amrnb.decoder" , "amrdec" , "audio_decoder.amrnb" }, { "OMX.google.amrnb.encoder" , "amrnbenc" , "audio_encoder.amrnb" }, { "OMX.google.amrwb.decoder" , "amrdec" , "audio_decoder.amrwb" }, { "OMX.google.amrwb.encoder" , "amrwbenc" , "audio_encoder.amrwb" }, { "OMX.google.h264.decoder" , "avcdec" , "video_decoder.avc" }, { "OMX.google.h264.encoder" , "avcenc" , "video_encoder.avc" }, { "OMX.google.hevc.decoder" , "hevcdec" , "video_decoder.hevc" }, { "OMX.google.g711.alaw.decoder" , "g711dec" , "audio_decoder.g711alaw" }, { "OMX.google.g711.mlaw.decoder" , "g711dec" , "audio_decoder.g711mlaw" }, { "OMX.google.mpeg2.decoder" , "mpeg2dec" , "video_decoder.mpeg2" }, { "OMX.google.h263.decoder" , "mpeg4dec" , "video_decoder.h263" }, { "OMX.google.h263.encoder" , "mpeg4enc" , "video_encoder.h263" }, { "OMX.google.mpeg4.decoder" , "mpeg4dec" , "video_decoder.mpeg4" }, { "OMX.google.mpeg4.encoder" , "mpeg4enc" , "video_encoder.mpeg4" }, { "OMX.google.mp3.decoder" , "mp3dec" , "audio_decoder.mp3" }, { "OMX.google.vorbis.decoder" , "vorbisdec" , "audio_decoder.vorbis" }, { "OMX.google.opus.decoder" , "opusdec" , "audio_decoder.opus" }, { "OMX.google.vp8.decoder" , "vpxdec" , "video_decoder.vp8" }, { "OMX.google.vp9.decoder" , "vpxdec" , "video_decoder.vp9" }, { "OMX.google.vp8.encoder" , "vpxenc" , "video_encoder.vp8" }, { "OMX.google.raw.decoder" , "rawdec" , "audio_decoder.raw" }, { "OMX.google.flac.encoder" , "flacenc" , "audio_encoder.flac" }, { "OMX.google.gsm.decoder" , "gsmdec" , "audio_decoder.gsm" }, };
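The mapping from component name to shared library is just string concatenation over the table above. As a rough sketch, using a three-entry excerpt of kComponents and a hypothetical helper named libraryFor (the real code goes on to dlopen the result, which is not reproduced here):

```cpp
#include <cstring>
#include <string>

struct Component {
    const char *name;
    const char *libSuffix;
};

// A three-entry excerpt of the kComponents table from the listing.
static const Component kSample[] = {
    {"OMX.google.aac.decoder", "aacdec"},
    {"OMX.google.mp3.decoder", "mp3dec"},
    {"OMX.google.vp9.decoder", "vpxdec"},
};

// Returns the shared-library file name for a component, or "" if unknown.
inline std::string libraryFor(const char *componentName) {
    for (const Component &c : kSample) {
        if (std::strcmp(componentName, c.name) != 0) continue;
        return std::string("libstagefright_soft_") + c.libSuffix + ".so";
    }
    return std::string();
}
```

For "OMX.google.mp3.decoder" this yields libstagefright_soft_mp3dec.so. Several components share one library in the full table, for example both VP8 and VP9 decoding map to the vpxdec suffix.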

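Every software decoder library exports a createSoftOMXComponent function. Taking the MP3 software decoder as an example, that function creates the decoder through the android::SoftMP3 constructor.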

android::SoftOMXComponent *createSoftOMXComponent( const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { return new android::SoftMP3(name, callbacks, appData, component); }

By now you may be feeling a little lost. If so, here is a diagram as a short summary:

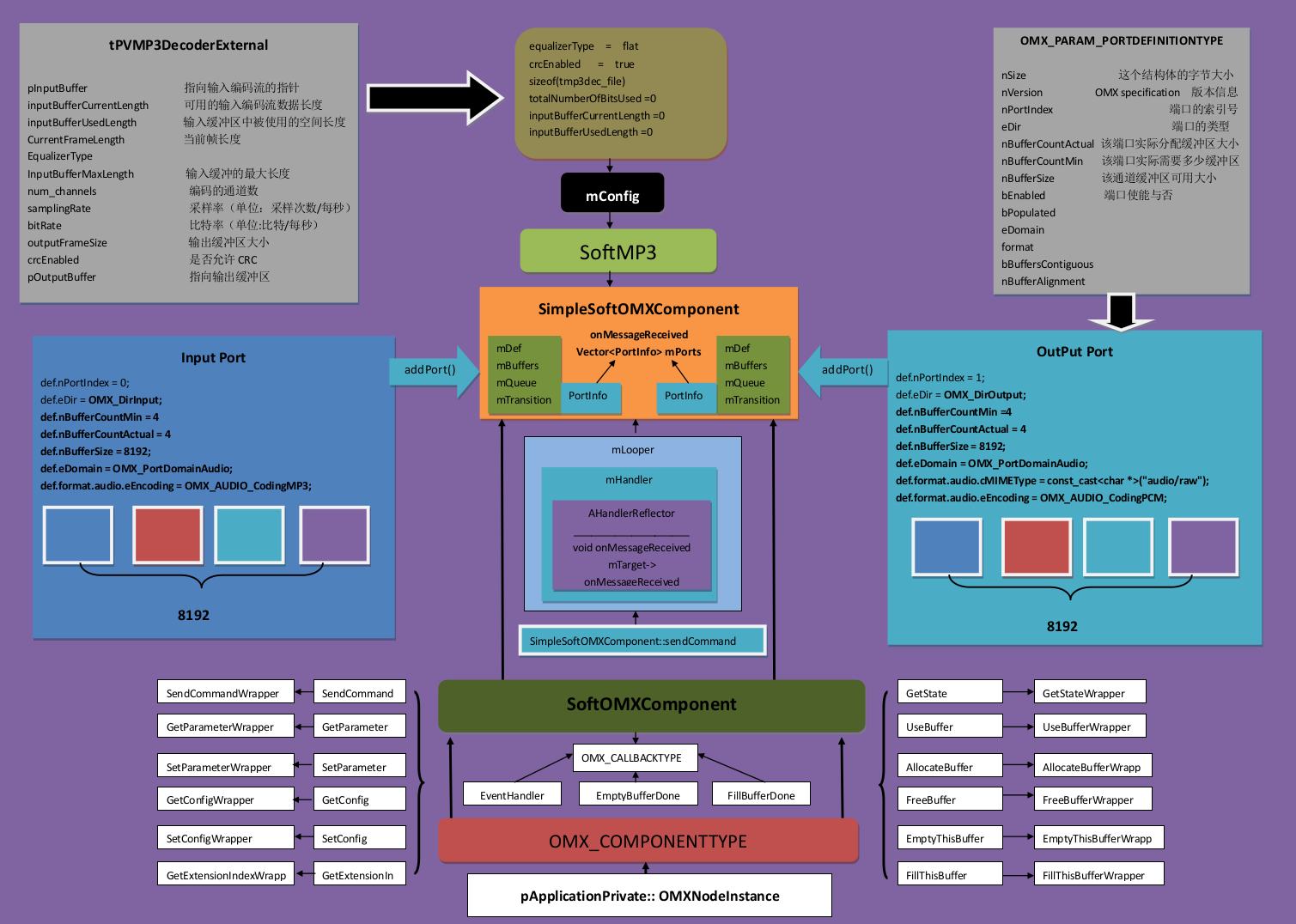

The MP3 software decoder's constructor has three key steps:

Constructing the SimpleSoftOMXComponent base class

initPorts();

initDecoder();

SoftMP3::SoftMP3(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) : SimpleSoftOMXComponent(name, callbacks, appData, component), mConfig(new tPVMP3DecoderExternal), mDecoderBuf(NULL), mAnchorTimeUs(0), mNumFramesOutput(0), mNumChannels(2), mSamplingRate(44100), mSignalledError(false), mSawInputEos(false), mSignalledOutputEos(false), mOutputPortSettingsChange(NONE) { initPorts(); initDecoder(); }

SimpleSoftOMXComponent::SimpleSoftOMXComponent(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) : SoftOMXComponent(name, callbacks, appData, component), mLooper(new ALooper), mHandler(new AHandlerReflector<SimpleSoftOMXComponent>(this)), mState(OMX_StateLoaded), mTargetState(OMX_StateLoaded) { mLooper->setName(name); mLooper->registerHandler(mHandler); mLooper->start(false /* runOnCallingThread */, false /* canCallJava */, ANDROID_PRIORITY_FOREGROUND); }

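SoftOMXComponent::SoftOMXComponent(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) : mName(name), mCallbacks(callbacks), mComponent(new OMX_COMPONENTTYPE), mLibHandle(NULL) { mComponent->nSize = sizeof(*mComponent); mComponent->nVersion.s.nVersionMajor = 1; mComponent->nVersion.s.nVersionMinor = 0; mComponent->nVersion.s.nRevision = 0; mComponent->nVersion.s.nStep = 0; mComponent->pComponentPrivate = this; mComponent->pApplicationPrivate = appData; mComponent->GetComponentVersion = NULL; mComponent->SendCommand = SendCommandWrapper; mComponent->GetParameter = GetParameterWrapper; mComponent->SetParameter = SetParameterWrapper; mComponent->GetConfig = GetConfigWrapper; mComponent->SetConfig = SetConfigWrapper; mComponent->GetExtensionIndex = GetExtensionIndexWrapper; mComponent->GetState = GetStateWrapper; mComponent->ComponentTunnelRequest = NULL; mComponent->UseBuffer = UseBufferWrapper; mComponent->AllocateBuffer = AllocateBufferWrapper; mComponent->FreeBuffer = FreeBufferWrapper; mComponent->EmptyThisBuffer = EmptyThisBufferWrapper; mComponent->FillThisBuffer = FillThisBufferWrapper; mComponent->SetCallbacks = NULL; mComponent->ComponentDeInit = NULL; mComponent->UseEGLImage = NULL; mComponent->ComponentRoleEnum = NULL; *component = mComponent; }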

OMX_CALLBACKTYPE OMXNodeInstance::kCallbacks = { & OnEvent, & OnEmptyBufferDone, & OnFillBufferDone };

void SoftMP3::initPorts() { OMX_PARAM_PORTDEFINITIONTYPE def ; InitOMXParams(&def ); def .nPortIndex = 0 ; def .eDir = OMX_DirInput; def .nBufferCountMin = kNumBuffers; def .nBufferCountActual = def .nBufferCountMin; def .nBufferSize = 8192 ; def .bEnabled = OMX_TRUE; def .bPopulated = OMX_FALSE; def .eDomain = OMX_PortDomainAudio; def .bBuffersContiguous = OMX_FALSE; def .nBufferAlignment = 1 ; def .format .audio.cMIMEType = const_cast<char *>(MEDIA_MIMETYPE_AUDIO_MPEG); def .format .audio.pNativeRender = NULL; def .format .audio.bFlagErrorConcealment = OMX_FALSE; def .format .audio.eEncoding = OMX_AUDIO_CodingMP3; addPort(def ); def .nPortIndex = 1 ; def .eDir = OMX_DirOutput; def .nBufferCountMin = kNumBuffers; def .nBufferCountActual = def .nBufferCountMin; def .nBufferSize = kOutputBufferSize; def .bEnabled = OMX_TRUE; def .bPopulated = OMX_FALSE; def .eDomain = OMX_PortDomainAudio; def .bBuffersContiguous = OMX_FALSE; def .nBufferAlignment = 2 ; def .format .audio.cMIMEType = const_cast<char *>("audio/raw" ); def .format .audio.pNativeRender = NULL; def .format .audio.bFlagErrorConcealment = OMX_FALSE; def .format .audio.eEncoding = OMX_AUDIO_CodingPCM; addPort(def ); }

void SoftMP3::initDecoder() { mConfig->equalizerType = flat; mConfig->crcEnabled = false ; uint32_t memRequirements = pvmp3_decoderMemRequirements() ; mDecoderBuf = malloc(memRequirements); pvmp3_InitDecoder(mConfig , mDecoderBuf ) ; mIsFirst = true ; }

Let's go back to allocateNode. Once the component has been created, makeNodeID generates an ID for the node and adds it to mNodeIDToInstance. Each instance corresponds to exactly one ID.

OMX::node_id OMX::makeNodeID(OMXNodeInstance *instance) { /* mLock is already held. */ node_id node = (node_id)++mNodeCounter; mNodeIDToInstance.add(node, instance); return node; }
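The ID bookkeeping amounts to a counter plus a map, and the same map is what findInstance consults later on. A minimal sketch, with std::map standing in for the AOSP container and Instance and NodeRegistry as hypothetical names (locking is left out):

```cpp
#include <cstdint>
#include <map>

// Hypothetical stand-in for OMXNodeInstance.
struct Instance {
    int tag;
};

class NodeRegistry {
public:
    // Like makeNodeID: bump the counter and remember which instance owns it.
    uint32_t makeNodeID(Instance *instance) {
        uint32_t node = ++mNodeCounter;
        mNodeIDToInstance[node] = instance;
        return node;
    }

    // Like findInstance: unknown IDs yield nullptr.
    Instance *findInstance(uint32_t node) const {
        auto it = mNodeIDToInstance.find(node);
        return it == mNodeIDToInstance.end() ? nullptr : it->second;
    }

private:
    uint32_t mNodeCounter = 0;
    std::map<uint32_t, Instance *> mNodeIDToInstance;
};
```

Because the counter is pre-incremented, the first ID handed out is 1, so 0 stays available as the "no node" value that allocateNode writes to *node on entry.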

Next the OMXCodec itself is created. Its constructor calls setComponentRole, which uses the MIME type and isEncoder to look up the corresponding role name and sets it on the component.

OMXCodec::OMXCodec(const sp<IOMX> &omx, IOMX::node_id node, uint32_t quirks, uint32_t flags, bool isEncoder, const char *mime, const char *componentName, const sp<MediaSource> &source, const sp<ANativeWindow> &nativeWindow) : mOMX(omx) /* ... */ { mPortStatus[kPortIndexInput] = ENABLED; mPortStatus[kPortIndexOutput] = ENABLED; setComponentRole(); }

void OMXCodec::setComponentRole(const sp<IOMX> &omx, IOMX::node_id node, bool isEncoder, const char *mime) { struct MimeToRole { const char *mime; const char *decoderRole; const char *encoderRole; }; static const MimeToRole kMimeToRole[] = { { MEDIA_MIMETYPE_AUDIO_MPEG, "audio_decoder.mp3", "audio_encoder.mp3" }, { MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_I, "audio_decoder.mp1", "audio_encoder.mp1" }, { MEDIA_MIMETYPE_AUDIO_MPEG_LAYER_II, "audio_decoder.mp2", "audio_encoder.mp2" }, { MEDIA_MIMETYPE_AUDIO_AMR_NB, "audio_decoder.amrnb", "audio_encoder.amrnb" }, { MEDIA_MIMETYPE_AUDIO_AMR_WB, "audio_decoder.amrwb", "audio_encoder.amrwb" }, { MEDIA_MIMETYPE_AUDIO_AAC, "audio_decoder.aac", "audio_encoder.aac" }, { MEDIA_MIMETYPE_AUDIO_VORBIS, "audio_decoder.vorbis", "audio_encoder.vorbis" }, { MEDIA_MIMETYPE_AUDIO_OPUS, "audio_decoder.opus", "audio_encoder.opus" }, { MEDIA_MIMETYPE_AUDIO_G711_MLAW, "audio_decoder.g711mlaw", "audio_encoder.g711mlaw" }, { MEDIA_MIMETYPE_AUDIO_G711_ALAW, "audio_decoder.g711alaw", "audio_encoder.g711alaw" }, { MEDIA_MIMETYPE_VIDEO_AVC, "video_decoder.avc", "video_encoder.avc" }, { MEDIA_MIMETYPE_VIDEO_HEVC, "video_decoder.hevc", "video_encoder.hevc" }, { MEDIA_MIMETYPE_VIDEO_MPEG4, "video_decoder.mpeg4", "video_encoder.mpeg4" }, { MEDIA_MIMETYPE_VIDEO_H263, "video_decoder.h263", "video_encoder.h263" }, { MEDIA_MIMETYPE_VIDEO_VP8, "video_decoder.vp8", "video_encoder.vp8" }, { MEDIA_MIMETYPE_VIDEO_VP9, "video_decoder.vp9", "video_encoder.vp9" }, { MEDIA_MIMETYPE_AUDIO_RAW, "audio_decoder.raw", "audio_encoder.raw" }, { MEDIA_MIMETYPE_AUDIO_FLAC, "audio_decoder.flac", "audio_encoder.flac" }, { MEDIA_MIMETYPE_AUDIO_MSGSM, "audio_decoder.gsm", "audio_encoder.gsm" }, { MEDIA_MIMETYPE_VIDEO_MPEG2, "video_decoder.mpeg2", "video_encoder.mpeg2" }, { MEDIA_MIMETYPE_AUDIO_AC3, "audio_decoder.ac3", "audio_encoder.ac3" }, }; static const size_t kNumMimeToRole = sizeof(kMimeToRole) / sizeof(kMimeToRole[0]); size_t i; for (i = 0; i < kNumMimeToRole; ++i) { if (!strcasecmp(mime, kMimeToRole[i].mime)) { break; } } if (i == kNumMimeToRole) { return; } const char *role = isEncoder ? kMimeToRole[i].encoderRole : kMimeToRole[i].decoderRole; if (role != NULL) { OMX_PARAM_COMPONENTROLETYPE roleParams; InitOMXParams(&roleParams); strncpy((char *)roleParams.cRole, role, OMX_MAX_STRINGNAME_SIZE - 1); roleParams.cRole[OMX_MAX_STRINGNAME_SIZE - 1] = '\0'; status_t err = omx->setParameter(node, OMX_IndexParamStandardComponentRole, &roleParams, sizeof(roleParams)); if (err != OK) { ALOGW("Failed to set standard component role '%s'.", role); } } }
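The role lookup and the bounded copy in setComponentRole can be reduced to two small functions. This is only a sketch: roleFor and copyRole are hypothetical names, the table is a two-row excerpt, and the MIME strings are written out literally on the assumption that MEDIA_MIMETYPE_AUDIO_MPEG and MEDIA_MIMETYPE_VIDEO_AVC expand to "audio/mpeg" and "video/avc".

```cpp
#include <cstddef>
#include <cstring>
#include <strings.h>  // strcasecmp (POSIX)

struct MimeToRole {
    const char *mime;
    const char *decoderRole;
    const char *encoderRole;
};

// Two-row excerpt of the table in the listing.
static const MimeToRole kSampleRoles[] = {
    {"audio/mpeg", "audio_decoder.mp3", "audio_encoder.mp3"},
    {"video/avc", "video_decoder.avc", "video_encoder.avc"},
};

// Returns the role string, or nullptr when the MIME type is not listed.
// The comparison ignores case, as in the listing.
inline const char *roleFor(const char *mime, bool isEncoder) {
    for (const MimeToRole &e : kSampleRoles) {
        if (strcasecmp(mime, e.mime) == 0) {
            return isEncoder ? e.encoderRole : e.decoderRole;
        }
    }
    return nullptr;
}

// Copies a role into a fixed-size buffer, truncating if necessary and
// always leaving the buffer NUL-terminated.
template <size_t N>
inline void copyRole(char (&dst)[N], const char *role) {
    std::strncpy(dst, role, N - 1);
    dst[N - 1] = '\0';
}
```

The explicit terminator matters because strncpy does not write one when the source is at least as long as the limit.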

Having seen how the decoder is created, we return to the following call in initAudioDecoder:

status_t err = mAudioSource->start();

status_t AwesomePlayer::initAudioDecoder() { if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_RAW)) { mAudioSource = mAudioTrack; } else { mOmxSource = OMXCodec::Create(mClient.interface(), mAudioTrack->getFormat(), false, mAudioTrack); if (mOffloadAudio) { mAudioSource = mAudioTrack; } else { mAudioSource = mOmxSource; } } status_t err = mAudioSource->start(); }

From the code above, when the audio is neither raw nor offloaded, mAudioSource refers to mOmxSource, which is the OMXCodec object returned by OMXCodec::Create. So next we look at OMXCodec's start method.

status_t OMXCodec::start(MetaData *meta) { Mutex::Autolock autoLock(mLock); sp<MetaData> params = new MetaData; status_t err; if ((err = mSource->start(params.get())) != OK) { return err; } return init(); }

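This method calls mSource's start method and then init(), and our analysis of this code concentrates on those two calls. As before, we first need to establish what mSource actually refers to, which means tracing it back to where it comes from.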

OMXCodec::OMXCodec(const sp<IOMX> &omx, IOMX::node_id node, uint32_t quirks, uint32_t flags, bool isEncoder, const char *mime, const char *componentName, const sp<MediaSource> &source, const sp<ANativeWindow> &nativeWindow) : mOMX(omx), /* ... */ mSource(source) /* ... */ { mPortStatus[kPortIndexInput] = ENABLED; mPortStatus[kPortIndexOutput] = ENABLED; setComponentRole(); }

sp<MediaSource> OMXCodec::Create(const sp<IOMX> &omx, const sp<MetaData> &meta, bool createEncoder, const sp<MediaSource> &source, const char *matchComponentName, uint32_t flags, const sp<ANativeWindow> &nativeWindow) { /* ... */ sp<OMXCodec> codec = new OMXCodec(omx, node, quirks, flags, createEncoder, mime, componentName, source, nativeWindow); /* ... */ }

status_t AwesomePlayer::initAudioDecoder() { mOmxSource = OMXCodec::Create( mClient.interface (), mAudioTrack->getFormat(), false , mAudioTrack); }

void AwesomePlayer::setAudioSource(sp<MediaSource> source ) { CHECK(source != NULL ); mAudioTrack = source ; }

Here a MediaExtractor is used to split the media file into its audio and video tracks.

status_t AwesomePlayer::setDataSource_l(const sp<MediaExtractor> &extractor) { for (size_t i = 0; i < extractor->countTracks(); ++i) { if (!haveVideo && !strncasecmp(mime.string(), "video/", 6)) { setVideoSource(extractor->getTrack(i)); } else if (!haveAudio && !strncasecmp(mime.string(), "audio/", 6)) { setAudioSource(extractor->getTrack(i)); } } return OK; }

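That is the whole call chain, and it shows that everything ultimately originates from extractor->getTrack. Assuming the song being played is an MP3 file, the extractor is an MP3Extractor, so mAudioTrack is the return value of MP3Extractor::getTrack, namely an MP3Source. With that established, we can continue analyzing the prepare flow.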

sp<MediaSource> MP3Extractor::getTrack (size_t index) { if (mInitCheck != OK || index != 0 ) { return NULL ; } return new MP3Source ( mMeta, mDataSource, mFirstFramePos, mFixedHeader, mSeeker); }

MP3Source::start mainly creates a MediaBuffer and adds it to a MediaBufferGroup through add_buffer. It also resets the related state variables to their initial values.

status_t MP3Source::start(MetaData *) { CHECK(!mStarted ) ; mGroup = new MediaBufferGroup; mGroup->add_buffer(new MediaBuffer(kMaxFrameSize ) ); mCurrentPos = mFirstFramePos; mCurrentTimeUs = 0 ; mBasisTimeUs = mCurrentTimeUs; mSamplesRead = 0 ; mStarted = true ; return OK; }

Next, let's look at the init method.

status_t OMXCodec::init() { status_t err; if (!(mQuirks & kRequiresLoadedToIdleAfterAllocation)) { err = mOMX->sendCommand(mNode , OMX_CommandStateSet, OMX_StateIdle) ; setState(LOADED_TO_IDLE) ; } err = allocateBuffers() ; if (mQuirks & kRequiresLoadedToIdleAfterAllocation) { err = mOMX->sendCommand(mNode , OMX_CommandStateSet, OMX_StateIdle) ; setState(LOADED_TO_IDLE) ; } while (mState != EXECUTING && mState != ERROR) { mAsyncCompletion.wait(mLock); } return mState == ERROR ? UNKNOWN_ERROR : OK; }

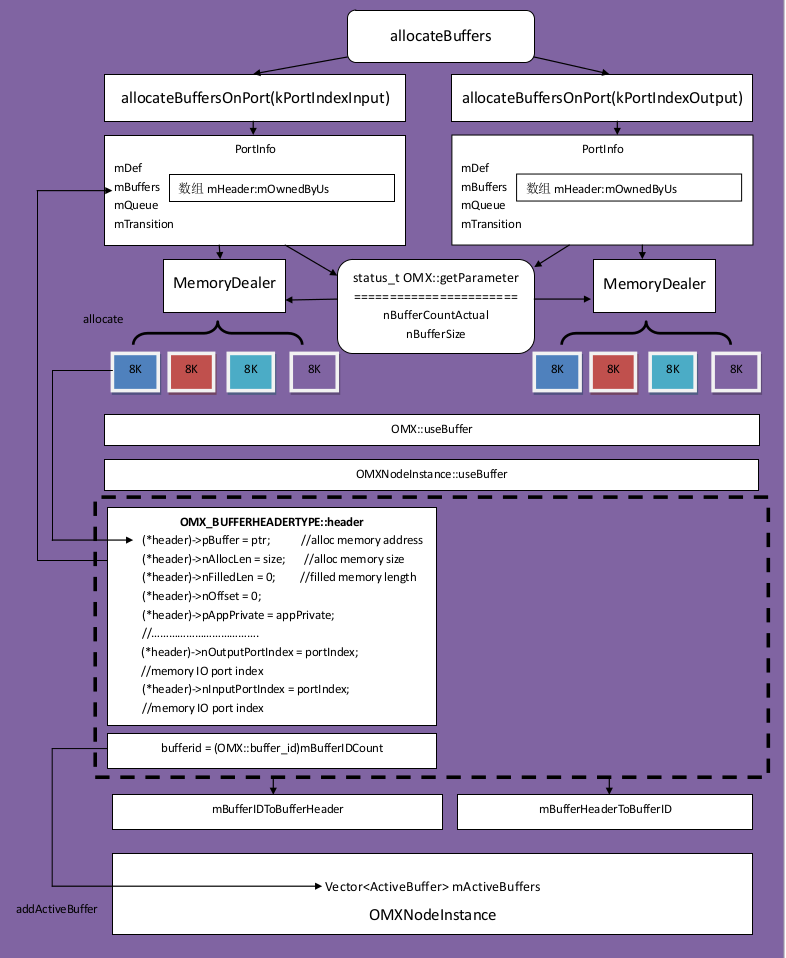

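init mainly does two things: it calls allocateBuffers to allocate buffers for the input and output ports, and it calls mOMX->sendCommand to request the transition to the Idle state from the underlying component. Depending on the kRequiresLoadedToIdleAfterAllocation quirk, the command is sent either before or after the buffers are allocated. Let's look at allocateBuffers first: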

status_t OMXCodec::allocateBuffers() { status_t err = allocateBuffersOnPort(kPortIndexInput); if (err != OK) { return err; } return allocateBuffersOnPort(kPortIndexOutput); }

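allocateBuffersOnPort allocates buffers of the size specified by the port definition for the input or output port and keeps track of them in mPortBuffers.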

status_t OMXCodec::allocateBuffersOnPort(OMX_U32 portIndex) { if (mNativeWindow != NULL && portIndex == kPortIndexOutput) { return allocateOutputBuffersFromNativeWindow(); } status_t err = OK; if ((mFlags & kStoreMetaDataInVideoBuffers) && portIndex == kPortIndexInput) { err = mOMX->storeMetaDataInBuffers(mNode, kPortIndexInput, OMX_TRUE); } OMX_PARAM_PORTDEFINITIONTYPE def; InitOMXParams(&def); def.nPortIndex = portIndex; err = mOMX->getParameter(mNode, OMX_IndexParamPortDefinition, &def, sizeof(def)); CODEC_LOGV("allocating %u buffers of size %u on %s port", def.nBufferCountActual, def.nBufferSize, portIndex == kPortIndexInput ? "input" : "output"); if (def.nBufferSize != 0 && def.nBufferCountActual > SIZE_MAX / def.nBufferSize) { return BAD_VALUE; } size_t totalSize = def.nBufferCountActual * def.nBufferSize; mDealer[portIndex] = new MemoryDealer(totalSize, "OMXCodec"); for (OMX_U32 i = 0; i < def.nBufferCountActual; ++i) { sp<IMemory> mem = mDealer[portIndex]->allocate(def.nBufferSize); BufferInfo info; info.mData = NULL; info.mSize = def.nBufferSize; IOMX::buffer_id buffer; if (portIndex == kPortIndexInput && ((mQuirks & kRequiresAllocateBufferOnInputPorts) || (mFlags & kUseSecureInputBuffers))) { if (mOMXLivesLocally) { mem.clear(); err = mOMX->allocateBuffer(mNode, portIndex, def.nBufferSize, &buffer, &info.mData); } else { err = mOMX->allocateBufferWithBackup(mNode, portIndex, mem, &buffer, mem->size()); } } else if (portIndex == kPortIndexOutput && (mQuirks & kRequiresAllocateBufferOnOutputPorts)) { if (mOMXLivesLocally) { mem.clear(); err = mOMX->allocateBuffer(mNode, portIndex, def.nBufferSize, &buffer, &info.mData); } else { err = mOMX->allocateBufferWithBackup(mNode, portIndex, mem, &buffer, mem->size()); } } else { err = mOMX->useBuffer(mNode, portIndex, mem, &buffer, mem->size()); } if (mem != NULL) { info.mData = mem->pointer(); } info.mBuffer = buffer; info.mStatus = OWNED_BY_US; info.mMem = mem; info.mMediaBuffer = NULL; mPortBuffers[portIndex].push(info); CODEC_LOGV("allocated buffer %u on %s port", buffer, portIndex == kPortIndexInput ? "input" : "output"); } if (portIndex == kPortIndexOutput) { sp<MetaData> meta = mSource->getFormat(); int32_t delay = 0; if (!meta->findInt32(kKeyEncoderDelay, &delay)) { delay = 0; } int32_t padding = 0; if (!meta->findInt32(kKeyEncoderPadding, &padding)) { padding = 0; } int32_t numchannels = 0; if (delay + padding) { if (mOutputFormat->findInt32(kKeyChannelCount, &numchannels)) { size_t frameSize = numchannels * sizeof(int16_t); if (mSkipCutBuffer != NULL) { size_t prevbuffersize = mSkipCutBuffer->size(); if (prevbuffersize != 0) { ALOGW("Replacing SkipCutBuffer holding %zu bytes", prevbuffersize); } } mSkipCutBuffer = new SkipCutBuffer(delay * frameSize, padding * frameSize); } } } if (portIndex == kPortIndexInput && (mFlags & kUseSecureInputBuffers)) { Vector<MediaBuffer *> buffers; for (size_t i = 0; i < def.nBufferCountActual; ++i) { const BufferInfo &info = mPortBuffers[kPortIndexInput].itemAt(i); MediaBuffer *mbuf = new MediaBuffer(info.mData, info.mSize); buffers.push(mbuf); } status_t err = mSource->setBuffers(buffers); } return OK; }

status_t OMX::allocateBuffer(node_id node, OMX_U32 port_index, size_t size, buffer_id *buffer, void **buffer_data) { return findInstance(node)->allocateBuffer(port_index, size, buffer, buffer_data); }

status_t OMXNodeInstance::allocateBuffer(OMX_U32 portIndex, size_t size, OMX::buffer_id *buffer, void **buffer_data) { Mutex::Autolock autoLock(mLock); BufferMeta *buffer_meta = new BufferMeta(size); OMX_BUFFERHEADERTYPE *header; OMX_ERRORTYPE err = OMX_AllocateBuffer(mHandle, &header, portIndex, buffer_meta, size); if (err != OMX_ErrorNone) { CLOG_ERROR(allocateBuffer, err, BUFFER_FMT(portIndex, "%zu@", size)); delete buffer_meta; buffer_meta = NULL; *buffer = 0; return StatusFromOMXError(err); } CHECK_EQ(header->pAppPrivate, buffer_meta); *buffer = makeBufferID(header); *buffer_data = header->pBuffer; addActiveBuffer(portIndex, *buffer); sp<GraphicBufferSource> bufferSource(getGraphicBufferSource()); if (bufferSource != NULL && portIndex == kPortIndexInput) { bufferSource->addCodecBuffer(header); } CLOG_BUFFER(allocateBuffer, NEW_BUFFER_FMT(*buffer, portIndex, "%zu@%p", size, *buffer_data)); return OK; }

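Earlier we mentioned that creating a component calls initPorts to initialize the port parameters. At that point no memory had been allocated for the ports; it was only parameter setup. Here, init starts allocating memory for each port. A single block covering the total required size is reserved first (the MemoryDealer), then it is divided into individual buffers, and each buffer is registered with its port through addActiveBuffer.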
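The sizing logic, including the overflow guard that produces BAD_VALUE in the listing, can be sketched independently of the OMX types. The helper below is hypothetical: Slice and carveBuffers are names made up for this example, and it assumes the buffers sit back to back, whereas a real allocator such as MemoryDealer may add alignment or padding.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

struct Slice {
    size_t offset;  // start of the buffer inside the pool
    size_t size;    // size of the buffer in bytes
};

// Returns one slice per buffer, or an empty vector if count * size
// would overflow size_t (the BAD_VALUE case in the listing).
inline std::vector<Slice> carveBuffers(size_t count, size_t size) {
    std::vector<Slice> slices;
    if (size != 0 && count > SIZE_MAX / size) {
        return slices;  // total size is not representable
    }
    for (size_t i = 0; i < count; ++i) {
        slices.push_back({i * size, size});
    }
    return slices;
}
```

With the MP3 input port's nBufferSize of 8192 and, say, four buffers, the slices start at offsets 0, 8192, 16384 and 24576. The division-based check is used because multiplying first could silently wrap around.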

Next, let's look at the sendCommand part:

status_t OMX::sendCommand( node_id node , OMX_COMMANDTYPE cmd, OMX_S32 param) { return findInstance(node )->sendCommand (cmd, param); }

OMXNodeInstance *OMX::findInstance(node_id node ) { Mutex::Autolock autoLock(mLock ) ; ssize_t index = mNodeIDToInstance.indexOfKey(node ) ; return index < 0 ? NULL : mNodeIDToInstance.valueAt(index ) ; }

status_t OMXNodeInstance::sendCommand(OMX_COMMANDTYPE cmd, OMX_S32 param) { const sp<GraphicBufferSource>& bufferSource(getGraphicBufferSource()); if (bufferSource != NULL && cmd == OMX_CommandStateSet) { if (param == OMX_StateIdle) { bufferSource->omxIdle(); } else if (param == OMX_StateLoaded) { bufferSource->omxLoaded(); setGraphicBufferSource(NULL); } } const char *paramString = cmd == OMX_CommandStateSet ? asString((OMX_STATETYPE)param) : portString(param); CLOG_STATE(sendCommand, "%s(%d), %s(%d)", asString(cmd), cmd, paramString, param); OMX_ERRORTYPE err = OMX_SendCommand(mHandle, cmd, param, NULL); CLOG_IF_ERROR(sendCommand, err, "%s(%d), %s(%d)", asString(cmd), cmd, paramString, param); return StatusFromOMXError(err); }

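As shown above, OMXNodeInstance::sendCommand does two main things:

When a GraphicBufferSource is attached and the command sets the state to Idle, it calls bufferSource->omxIdle(), which moves the state from Executing to Idle, waits for the decoding already in progress to finish, and stops sending further data to the decoder.

It then calls OMX_SendCommand to continue with the rest of the processing. OMX_SendCommand is a macro that forwards the call to the component's SendCommand function pointer: #define OMX_SendCommand(hComponent, Cmd, nParam, pCmdData) ((OMX_COMPONENTTYPE*)hComponent)->SendCommand(hComponent, Cmd, nParam, pCmdData)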
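OMX_SendCommand casts the opaque handle to the component struct and calls through the function pointer stored in it, which is how the call reaches whichever component was plugged in. The sketch below reproduces that dispatch pattern in isolation. `FakeComponent`, `FAKE_SendCommand`, and `recordCommand` are hypothetical stand-ins, not OpenMAX IL definitions, and plain `int` replaces the OMX enum types.

```cpp
#include <cassert>

// Hypothetical stand-in for OMX_COMPONENTTYPE: a struct holding a function pointer.
struct FakeComponent {
    int (*SendCommand)(void *hComponent, int cmd, int param, void *cmdData);
    int lastCmd;
    int lastParam;
};

// Same shape as OMX_SendCommand: cast the handle, then call through the pointer.
#define FAKE_SendCommand(hComponent, Cmd, nParam, pCmdData) \
    ((FakeComponent *)hComponent)->SendCommand(hComponent, Cmd, nParam, pCmdData)

// Component-side implementation that only records what it was asked to do.
static int recordCommand(void *hComponent, int cmd, int param, void * /*cmdData*/) {
    FakeComponent *self = static_cast<FakeComponent *>(hComponent);
    self->lastCmd = cmd;
    self->lastParam = param;
    return 0;  // stands in for OMX_ErrorNone
}
```

A caller that only holds a `void *` handle to a `FakeComponent` initialised with `recordCommand` can invoke `FAKE_SendCommand(handle, 0, 2, nullptr)` without knowing which implementation sits behind the pointer.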

void OMXNodeInstance::setHandle(OMX::node_id node_id , OMX_HANDLETYPE handle ) { mNodeID = node_id; CLOG_LIFE(allocateNode , "handle=%p" , handle ) ; CHECK(mHandle == NULL) ; mHandle = handle; }

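status_t OMX::allocateNode(const char *name, const sp<IOMXObserver> &observer, node_id *node) { OMXNodeInstance *instance = new OMXNodeInstance(this, observer, name); OMX_COMPONENTTYPE *handle; OMX_ERRORTYPE err = mMaster->makeComponentInstance(name, &OMXNodeInstance::kCallbacks, instance, &handle); /* abridged: error handling */ *node = makeNodeID(instance); instance->setHandle(*node, handle); return OK; }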

OMX_ERRORTYPE OMXMaster::makeComponentInstance(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { /* abridged: plugin is looked up by component name before this point */ OMX_ERRORTYPE err = plugin->makeComponentInstance(name, callbacks, appData, component); mPluginByInstance.add(*component, plugin); return err; }

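OMX_ERRORTYPE SoftOMXPlugin::makeComponentInstance(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { /* abridged: match name against the known components, dlopen the codec library, and resolve createSoftOMXComponent */ sp<SoftOMXComponent> codec = (*createSoftOMXComponent)(name, callbacks, appData, component); /* abridged: on success the function returns OMX_ErrorNone here */ return OMX_ErrorInvalidComponentName; }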

android::SoftOMXComponent *createSoftOMXComponent( const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) { return new android::SoftMP3(name, callbacks, appData, component); }

SoftMP3::SoftMP3 ( const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) : SimpleSoftOMXComponent (name, callbacks, appData, component), mOutputPortSettingsChange (NONE) { initPorts (); initDecoder (); }

SimpleSoftOMXComponent::SimpleSoftOMXComponent(const char *name, const OMX_CALLBACKTYPE *callbacks, OMX_PTR appData, OMX_COMPONENTTYPE **component) : SoftOMXComponent(name, callbacks, appData, component), mLooper(new ALooper), mHandler(new AHandlerReflector<SimpleSoftOMXComponent>(this)), mState(OMX_StateLoaded), mTargetState(OMX_StateLoaded) { mLooper->setName(name); mLooper->registerHandler(mHandler); mLooper->start(false /* runOnCallingThread */, false /* canCallJava */, ANDROID_PRIORITY_FOREGROUND); }

OMX_ERRORTYPE SimpleSoftOMXComponent::sendCommand(OMX_COMMANDTYPE cmd, OMX_U32 param, OMX_PTR data) { CHECK(data == NULL); sp<AMessage> msg = new AMessage(kWhatSendCommand, mHandler); msg->setInt32("cmd", cmd); msg->setInt32("param", param); msg->post(); return OMX_ErrorNone; }

void SimpleSoftOMXComponent::onMessageReceived(const sp<AMessage> &msg) { Mutex::Autolock autoLock(mLock); uint32_t msgType = msg->what(); ALOGV("msgType = %d", msgType); switch (msgType) { case kWhatSendCommand: { int32_t cmd, param; CHECK(msg->findInt32("cmd", &cmd)); CHECK(msg->findInt32("param", &param)); onSendCommand((OMX_COMMANDTYPE)cmd, (OMX_U32)param); break; } /* other cases omitted */ } }

void SimpleSoftOMXComponent::onSendCommand( OMX_COMMANDTYPE cmd, OMX_U32 param ) { switch (cmd) { case OMX_CommandStateSet: { onChangeState((OMX_STATETYPE)param ); break ; } case OMX_CommandPortEnable: case OMX_CommandPortDisable: { onPortEnable(param , cmd == OMX_CommandPortEnable); break ; } case OMX_CommandFlush: { onPortFlush(param , true ); break ; } default : TRESPASS(); break ; } }

void SimpleSoftOMXComponent::onChangeState(OMX_STATETYPE state) { /* We shouldn't be in a state transition already. */ CHECK_EQ((int)mState, (int)mTargetState); switch (mState) { case OMX_StateLoaded: CHECK_EQ((int)state, (int)OMX_StateIdle); break; case OMX_StateIdle: CHECK(state == OMX_StateLoaded || state == OMX_StateExecuting); break; case OMX_StateExecuting: { CHECK_EQ((int)state, (int)OMX_StateIdle); for (size_t i = 0; i < mPorts.size(); ++i) { onPortFlush(i, false /* sendFlushComplete */); } mState = OMX_StateIdle; notify(OMX_EventCmdComplete, OMX_CommandStateSet, state, NULL); break; } default: TRESPASS(); } mTargetState = state; checkTransitions(); }

void SimpleSoftOMXComponent::checkTransitions() { if (mState != mTargetState) { bool transitionComplete = true ; if (mState == OMX_StateLoaded) { CHECK_EQ((int )mTargetState, (int )OMX_StateIdle); for (size_t i = 0 ; i < mPorts.size(); ++i) { const PortInfo &port = mPorts.itemAt(i); if (port.mDef.bEnabled == OMX_FALSE) { continue ; } if (port.mDef.bPopulated == OMX_FALSE) { transitionComplete = false ; break ; } } } else if (mTargetState == OMX_StateLoaded) { } if (transitionComplete) { mState = mTargetState; if (mState == OMX_StateLoaded) { onReset(); } notify(OMX_EventCmdComplete, OMX_CommandStateSet, mState, NULL); } } for (size_t i = 0 ; i < mPorts.size(); ++i) { PortInfo *port = &mPorts.editItemAt(i); if (port->mTransition == PortInfo::DISABLING) { if (port->mBuffers.empty ()) { ALOGV("Port %zu now disabled." , i); port->mTransition = PortInfo::NONE; notify(OMX_EventCmdComplete, OMX_CommandPortDisable, i, NULL); onPortEnableCompleted(i, false ); } } else if (port->mTransition == PortInfo::ENABLING) { if (port->mDef.bPopulated == OMX_TRUE) { ALOGV("Port %zu now enabled." , i); port->mTransition = PortInfo::NONE; port->mDef.bEnabled = OMX_TRUE; notify(OMX_EventCmdComplete, OMX_CommandPortEnable, i, NULL); onPortEnableCompleted(i, true ); } } } }
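The key point in checkTransitions is that a Loaded to Idle request does not complete immediately: it stays pending until every enabled port has been populated with buffers, and only then is the completion notified. The following is a simplified sketch of just that rule. `MiniComponent`, `Port`, and the `State` enum are hypothetical names for illustration, and a counter stands in for the `notify(OMX_EventCmdComplete, ...)` call.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

enum State { Loaded, Idle, Executing };

struct Port {
    bool enabled;
    bool populated;
};

// Hypothetical, stripped-down model of the Loaded -> Idle rule only.
class MiniComponent {
public:
    explicit MiniComponent(std::vector<Port> ports)
        : mPorts(std::move(ports)), mState(Loaded), mTarget(Loaded), mNotified(0) {}

    // Record the requested state, then see whether it can complete right away.
    void requestState(State s) { mTarget = s; checkTransitions(); }

    // Mark a port as having all of its buffers, then re-check.
    void populate(size_t i) { mPorts[i].populated = true; checkTransitions(); }

    State state() const { return mState; }
    int notifications() const { return mNotified; }

private:
    void checkTransitions() {
        if (mState == mTarget) return;
        bool complete = true;
        if (mState == Loaded) {
            // Leaving Loaded requires every enabled port to be populated.
            for (const Port &p : mPorts) {
                if (p.enabled && !p.populated) { complete = false; break; }
            }
        }
        if (complete) {
            mState = mTarget;
            ++mNotified;  // stands in for the command-complete notification
        }
    }

    std::vector<Port> mPorts;
    State mState;
    State mTarget;
    int mNotified;
};
```

With two enabled ports and one disabled port, requesting `Idle` leaves the component in `Loaded` until both enabled ports have been populated; the disabled port is ignored.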

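Is that the end? Not quite. Everything above belongs to beginAsyncPrepare_l; the last step is finishAsyncPrepare_l, whose main job is to notify the upper layers that prepare has finished: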

void AwesomePlayer::finishAsyncPrepare_l() { if (mIsAsyncPrepare) { if (mVideoSource == NULL) { notifyListener_l(MEDIA_SET_VIDEO_SIZE, 0, 0) ; } else { notifyVideoSize_l() ; } notifyListener_l(MEDIA_PREPARED) ; } mPrepareResult = OK; modifyFlags((PREPARING|PREPARE_CANCELLED|PREPARING_CONNECTED) , CLEAR); modifyFlags(PREPARED, SET) ; mAsyncPrepareEvent = NULL; mPreparedCondition.broadcast() ; if (mAudioTearDown) { if (mPrepareResult == OK) { if (mExtractorFlags & MediaExtractor::CAN_SEEK) { seekTo_l(mAudioTearDownPosition ) ; } if (mAudioTearDownWasPlaying) { modifyFlags(CACHE_UNDERRUN, CLEAR) ; play_l() ; } } mAudioTearDown = false ; } }

Let's focus on notifyListener_l(MEDIA_PREPARED):

void AwesomePlayer::notifyListener_l(int msg , int ext1 , int ext2 ) { if ((mListener != NULL) && !mAudioTearDown) { sp<MediaPlayerBase> listener = mListener.promote() ; if (listener != NULL) { listener->sendEvent(msg , ext1 , ext2 ) ; } } }
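notifyListener_l holds its listener weakly and promotes it to a strong reference before each call, so an event is simply dropped if the listener has already been destroyed. The sketch below shows the same pattern with the standard library, where `std::weak_ptr::lock()` plays the role of `promote()`. `MiniPlayer` and `Listener` are hypothetical names, not Android classes, and the mAudioTearDown check is left out.

```cpp
#include <cassert>
#include <memory>

// Hypothetical listener that remembers the last event it received.
struct Listener {
    int lastMsg = -1;
    void sendEvent(int msg, int /*ext1*/, int /*ext2*/) { lastMsg = msg; }
};

class MiniPlayer {
public:
    void setListener(const std::shared_ptr<Listener> &l) { mListener = l; }

    // Returns true if the event reached a live listener.
    bool notify(int msg, int ext1 = 0, int ext2 = 0) {
        // lock() yields an empty pointer once the listener is gone.
        std::shared_ptr<Listener> l = mListener.lock();
        if (!l) return false;
        l->sendEvent(msg, ext1, ext2);
        return true;
    }

private:
    std::weak_ptr<Listener> mListener;  // does not keep the listener alive
};
```

Holding the listener weakly means the player never extends the listener's lifetime; once the owner releases it, later `notify` calls become no-ops.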

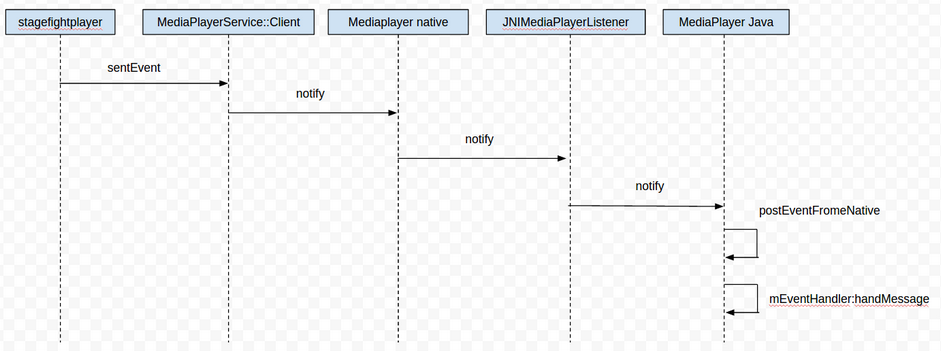

You may remember this diagram. It shows clearly that the whole call chain ends at EventHandler:

case MEDIA_PREPARED: try { scanInternalSubtitleTracks(); } catch (RuntimeException e) { Message msg2 = obtainMessage( MEDIA_ERROR, MEDIA_ERROR_UNKNOWN, MEDIA_ERROR_UNSUPPORTED, null ); sendMessage(msg2); } if (mOnPreparedListener != null ) mOnPreparedListener.onPrepared(mMediaPlayer); return ;