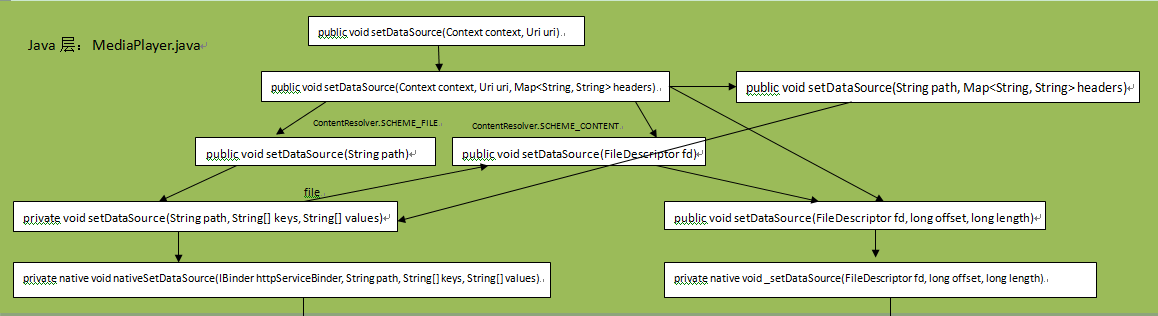

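setDataSource: creating the playback engine and setting the data source. setDataSource accepts a file path, a URL, or a Content Provider Uri as the identifier of the resource to load. To keep things simple, we pass a file Uri as the argument and trace the whole flow from there.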

public void setDataSource(Context context, Uri uri) throws IOException, IllegalArgumentException, SecurityException, IllegalStateException { setDataSource(context, uri, null); }

Here we assume the file-scheme branch is taken:

public void setDataSource (Context context, Uri uri, Map<String, String> headers) throws IOException, IllegalArgumentException, SecurityException, IllegalStateException { final String scheme = uri.getScheme(); if (ContentResolver.SCHEME_FILE.equals(scheme)) { setDataSource(uri.getPath()); return ; } else if (ContentResolver.SCHEME_CONTENT.equals(scheme) && Settings.AUTHORITY.equals(uri.getAuthority())) { uri = RingtoneManager.getActualDefaultRingtoneUri(context, RingtoneManager.getDefaultType(uri)); if (uri == null ) { throw new FileNotFoundException ("Failed to resolve default ringtone" ); } } AssetFileDescriptor fd = null ; try { ContentResolver resolver = context.getContentResolver(); fd = resolver.openAssetFileDescriptor(uri, "r" ); if (fd == null ) { return ; } if (fd.getDeclaredLength() < 0 ) { setDataSource(fd.getFileDescriptor()); } else { setDataSource(fd.getFileDescriptor(), fd.getStartOffset(), fd.getDeclaredLength()); } return ; } catch (SecurityException | IOException ex) { Log.w(TAG, "Couldn't open file on client side; trying server side: " + ex); } finally { if (fd != null ) { fd.close(); } } setDataSource(uri.toString(), headers); }

public void setDataSource (String path) throws IOException, IllegalArgumentException, SecurityException, IllegalStateException { setDataSource(path, null , null ); }

private void setDataSource(String path, String [] keys, String [] values) throws IOException, IllegalArgumentException, SecurityException, IllegalStateException { final Uri uri = Uri .parse(path); final String scheme = uri.getScheme(); if ("file" .equals(scheme)) { path = uri.getPath(); } else if (scheme != null ) { nativeSetDataSource( MediaHTTPService.createHttpServiceBinderIfNecessary(path), path, keys, values); return ; } final File file = new File(path); if (file.exists()) { FileInputStream is = new FileInputStream(file); FileDescriptor fd = is .getFD(); setDataSource(fd); is .close(); } else { throw new IOException("setDataSource failed." ); } }

public void setDataSource(FileDescriptor fd) throws IOException, IllegalArgumentException, IllegalStateException { setDataSource(fd, 0, 0x7ffffffffffffffL); }

public void setDataSource(FileDescriptor fd , long offset , long length ) throws IOException, IllegalArgumentException, IllegalStateException { _setDataSource(fd , offset , length ) ; }

private native void _setDataSource (FileDescriptor fd, long offset, long length) throws IOException, IllegalArgumentException, IllegalStateException ;

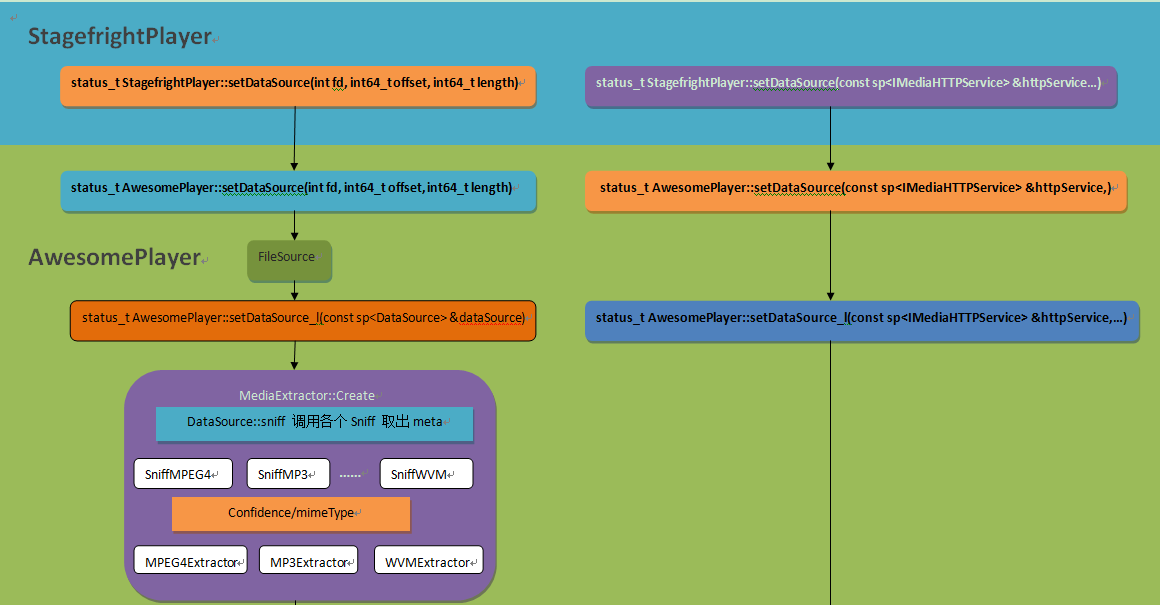

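Below is a rough diagram of the whole setDataSource path. It had to be scaled down to fit everything in, so if it is hard to read, open the image at full size or save it locally and zoom in: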

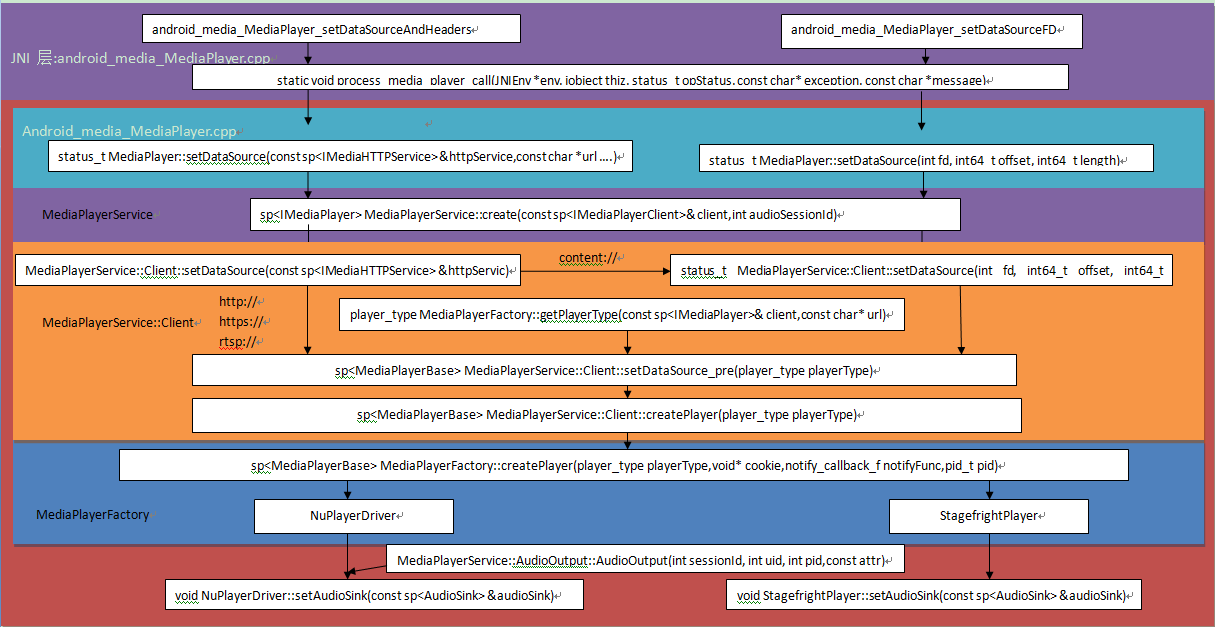

At this point we are ready to enter the JNI layer. The JNI function that gets called is:

static void android_media_MediaPlayer_setDataSourceFD(JNIEnv * env , jobject thiz , jobject fileDescriptor , jlong offset , jlong length ) { sp<MediaPlayer> mp = getMediaPlayer(env , thiz ) ; int fd = jniGetFDFromFileDescriptor(env , fileDescriptor ) ; ALOGV("setDataSourceFD: fd %d" , fd ) ; process_media_player_call( env , thiz , mp ->setDataSource (fd , offset , length ) ,"java/io/IOException" , "setDataSourceFD failed." ); }

Creating and loading the playback engine

static sp<MediaPlayer> getMediaPlayer(JNIEnv* env, jobject thiz) { Mutex::Autolock l(sLock); MediaPlayer* const p = (MediaPlayer*)env->GetLongField(thiz, fields.context); return sp<MediaPlayer>(p); }

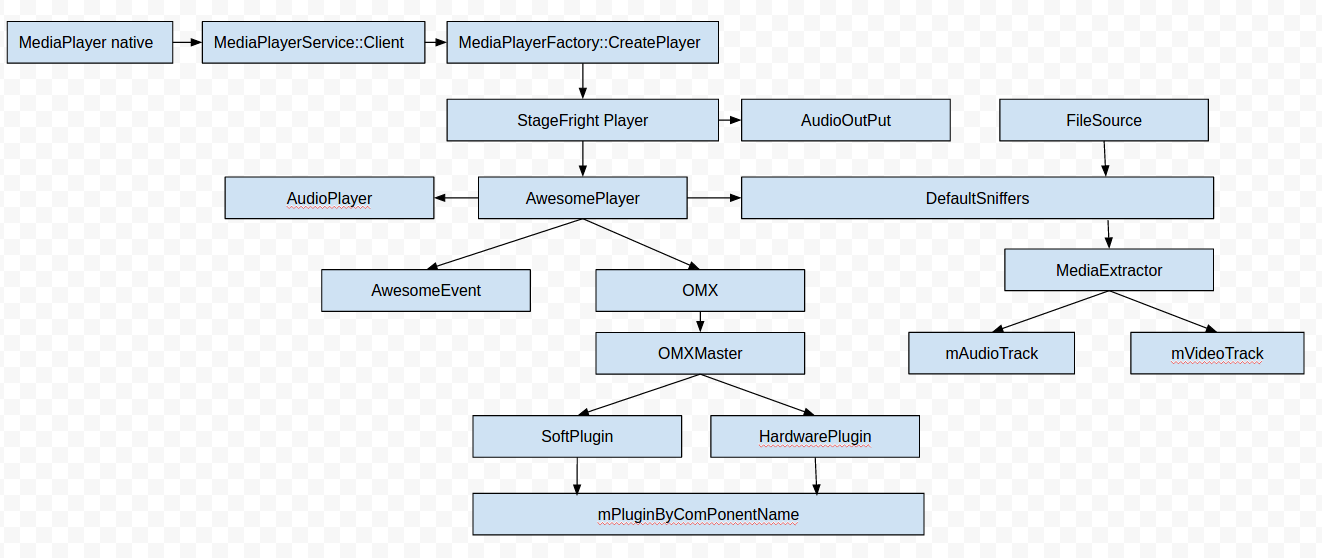

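In the native MediaPlayer, we first obtain MediaPlayerService and call its create method, which constructs a MediaPlayerService client through the MediaPlayerService::Client::Client constructor and returns it; the result is assigned to player. The player in player->setDataSource(httpService, url, headers) therefore refers to that MediaPlayerService::Client: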

status_t MediaPlayer::setDataSource(const sp<IMediaHTTPService> &httpService, const char *url, const KeyedVector<String8, String8> *headers) { status_t err = BAD_VALUE; if (url != NULL) { const sp<IMediaPlayerService>& service(getMediaPlayerService()); if (service != 0) { sp<IMediaPlayer> player(service->create(this, mAudioSessionId)); if ((NO_ERROR != doSetRetransmitEndpoint(player)) || (NO_ERROR != player->setDataSource(httpService, url, headers))) { player.clear(); } err = attachNewPlayer(player); } } return err; }

Let's first look at how this Client is created:

const sp<IMediaPlayerService>& IMediaDeathNotifier::getMediaPlayerService() { Mutex::Autolock _l(sServiceLock ) ; if (sMediaPlayerService == 0 ) { sp<IServiceManager> sm = defaultServiceManager() ; sp<IBinder> binder; do { binder = sm->getService(String16("media.player" ) ); if (binder != 0 ) { break; } usleep(500000 ); } while (true ); if (sDeathNotifier == NULL) { sDeathNotifier = new DeathNotifier() ; } binder->linkToDeath(sDeathNotifier ) ; sMediaPlayerService = interface_cast<IMediaPlayerService>(binder); } ALOGE_IF(sMediaPlayerService == 0, "no media player service!?" ) ; return sMediaPlayerService; }
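The lookup above boils down to "poll until the service is registered, then cache the handle under a lock". Here is a minimal standard-C++ sketch of that pattern. `ServiceCache`, `Service`, and the injected `Lookup` callback are hypothetical stand-ins for illustration; they are not Binder or AOSP APIs, and death notification is left out.

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <memory>
#include <mutex>
#include <string>
#include <thread>
#include <utility>

// Hypothetical stand-in for a remote service interface.
struct Service { std::string name; };

// Caches the first successful lookup; later calls return the cached handle.
class ServiceCache {
public:
    using Lookup = std::function<std::shared_ptr<Service>(const std::string&)>;

    ServiceCache(Lookup lookup, std::chrono::milliseconds retryDelay)
        : lookup_(std::move(lookup)), retryDelay_(retryDelay) {}

    std::shared_ptr<Service> get(const std::string& name) {
        std::lock_guard<std::mutex> guard(lock_);  // plays the role of Mutex::Autolock
        while (!cached_) {
            cached_ = lookup_(name);               // nullptr means "not registered yet"
            if (!cached_) std::this_thread::sleep_for(retryDelay_);
        }
        return cached_;
    }

private:
    Lookup lookup_;
    std::chrono::milliseconds retryDelay_;
    std::mutex lock_;
    std::shared_ptr<Service> cached_;
};
```

As in the original, the first caller blocks until the lookup succeeds, so the sketch only makes sense when the service is guaranteed to appear eventually.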

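getMediaPlayerService first obtains MediaPlayerService, which is created in the main function of mediaserver (this will be covered later). Its create method looks like this: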

sp<IMediaPlayer> MediaPlayerService::create(const sp<IMediaPlayerClient>& client, int audioSessionId) { pid_t pid = IPCThreadState::self()->getCallingPid(); int32_t connId = android_atomic_inc(&mNextConnId); sp<Client> c = new Client(this, pid, connId, client, audioSessionId, IPCThreadState::self()->getCallingUid()); wp<Client> w = c; { Mutex::Autolock lock(mLock); mClients.add(w); } return c; }
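Note that create stores a wp<Client> (a weak pointer) in mClients while returning the strong pointer to the caller, so the service tracks its clients without keeping them alive. A rough analogue using std::shared_ptr and std::weak_ptr is sketched below; the `Service` and `Client` types and the `liveClients` helper are hypothetical and only illustrate the ownership idea.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <memory>
#include <mutex>
#include <vector>

struct Client { int connId; };

class Service {
public:
    std::shared_ptr<Client> create() {
        // Post-increment returns the previous value, so ids start at 0.
        auto c = std::make_shared<Client>(Client{nextConnId_++});
        std::lock_guard<std::mutex> guard(lock_);
        clients_.push_back(c);  // stored as weak_ptr: does not keep c alive
        return c;
    }

    // Counts the clients that are still referenced somewhere.
    std::size_t liveClients() {
        std::lock_guard<std::mutex> guard(lock_);
        std::size_t n = 0;
        for (const auto& w : clients_) {
            if (!w.expired()) ++n;
        }
        return n;
    }

private:
    std::mutex lock_;
    std::atomic<int> nextConnId_{0};
    std::vector<std::weak_ptr<Client>> clients_;
};
```

Once the caller drops its last strong reference, the entry in the list expires instead of leaking the client.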

The code above news a Client object and adds it to mClients. Below is the Client constructor; it only initializes members, so there is not much to explain:

MediaPlayerService::Client ::Client ( const sp<MediaPlayerService>& service, pid_t pid, int32_t connId, const sp<IMediaPlayerClient>& client, int audioSessionId, uid_t uid) { mPid = pid; mConnId = connId; mService = service; mClient = client; mLoop = false ; mStatus = NO_INIT; mAudioSessionId = audioSessionId; mUID = uid; mRetransmitEndpointValid = false ; mAudioAttributes = NULL ; #if CALLBACK_ANTAGONIZER ALOGD ("create Antagonizer" ); mAntagonizer = new Antagonizer (notify, this ); #endif }

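In MediaPlayerService::Client::setDataSource, the file range is validated first, and then the player type is obtained through MediaPlayerFactory::getPlayerType: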

status_t MediaPlayerService::Client::setDataSource(int fd , int64_t offset , int64_t length ) { struct stat sb; int ret = fstat(fd, &sb); if (ret != 0 ) { ALOGE("fstat(%d) failed: %d, %s" , fd , ret , strerror (errno ) ); return UNKNOWN_ERROR; } if (offset >= sb.st_size) { ALOGE("offset error" ) ; ::close(fd); return UNKNOWN_ERROR; } if (offset + length > sb.st_size) { length = sb.st_size - offset; ALOGV("calculated length = %lld" , length ) ; } player_type playerType = MediaPlayerFactory::getPlayerType(this ,fd ,offset ,length ) ; sp<MediaPlayerBase> p = setDataSource_pre(playerType ) ; if (p == NULL) { return NO_INIT; } setDataSource_post(p , p ->setDataSource (fd , offset , length ) ); return mStatus; }
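The range check at the top of this function can be isolated into a small helper. The sketch below is an illustration, not AOSP code: `clampRange` is a hypothetical name, the rejection of negative values is an extra assumption, and the comparison is written against the remaining byte count so that `offset + length` is never computed.

```cpp
#include <cassert>
#include <cstdint>

// Clamps *length so that [offset, offset + *length) stays inside the file.
// Returns false when the offset does not point inside the file at all.
bool clampRange(int64_t fileSize, int64_t offset, int64_t* length) {
    assert(length != nullptr);
    if (offset < 0 || *length < 0 || offset >= fileSize) {
        return false;  // the original returns UNKNOWN_ERROR for offset >= size
    }
    const int64_t remaining = fileSize - offset;
    if (*length > remaining) {
        *length = remaining;  // same effect as length = sb.st_size - offset
    }
    return true;
}
```

This is why the Java layer can pass 0x7ffffffffffffffL as the length for "the whole file": the native side shrinks it to the real size.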

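Let's look at getPlayerType first. The overload shown here takes a client parameter of type IMediaPlayer and a url parameter (the call above passes fd, offset, and length rather than a url). The player type itself is determined by the GET_PLAYER_TYPE_IMPL macro.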

player_type MediaPlayerFactory::getPlayerType(const sp <IMediaPlayer>& client ,const char * url ) { GET_PLAYER_TYPE_IMPL(client , url ) ; }

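sFactoryMap is a keyed collection that maps each player type to its factory class (it is accessed with keyAt and valueAt). GET_PLAYER_TYPE_IMPL iterates over sFactoryMap, calls scoreFactory on each IFactory to score it against the data source, and returns the player type with the best score, falling back to the default type when every factory scores 0.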

Mutex::Autolock lock_(&sLock); \ \ player_type ret = STAGEFRIGHT_PLAYER; \ float bestScore = 0.0 ; \ \ for (size_t i = 0 ; i < sFactoryMap.size(); ++i) { \ \ IFactory* v = sFactoryMap.valueAt(i); \ float thisScore; \ CHECK(v != NULL); \ thisScore = v->scoreFactory(a, bestScore); \ if (thisScore > bestScore) { \ ret = sFactoryMap.keyAt(i); \ bestScore = thisScore; \ } \ } if (0.0 == bestScore) { \ ret = getDefaultPlayerType(); \ } \ return ret;
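The macro is easier to follow as an ordinary function. Below is a minimal sketch of the same "highest score wins, otherwise use the default" selection. The `ScoreFn` callback type, the `pickPlayerType` name, and the cut-down enum are assumptions made for illustration; locking and the real IFactory interface are omitted.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <string>

enum PlayerType { STAGEFRIGHT_PLAYER, NU_PLAYER, TEST_PLAYER };

// Each factory reports how confident it is that it can play the source.
// curScore is the best score so far, so a factory may skip expensive work.
using ScoreFn = std::function<float(const std::string& url, float curScore)>;

PlayerType pickPlayerType(const std::map<PlayerType, ScoreFn>& factories,
                          const std::string& url,
                          PlayerType defaultType) {
    PlayerType ret = defaultType;  // used when no factory scores above zero
    float bestScore = 0.0f;
    for (const auto& entry : factories) {
        const float thisScore = entry.second(url, bestScore);
        if (thisScore > bestScore) {
            ret = entry.first;
            bestScore = thisScore;
        }
    }
    return ret;
}
```

Because the comparison is strict, a later factory has to beat the current best score, not just match it, to take over.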

Once the player type is known, setDataSource_pre calls createPlayer to create a player of that type:

sp<MediaPlayerBase> MediaPlayerService::Client::setDataSource_pre ( player_type playerType ) { ALOGV ("player type = %d" , playerType); sp<MediaPlayerBase> p = createPlayer (playerType); if (p == NULL) { return p; } if (!p-> hardwareOutput ()) { Mutex::Autolock l (mLock); mAudioOutput = new AudioOutput (mAudioSessionId, IPCThreadState::self ()-> getCallingUid (), mPid, mAudioAttributes); static_cast<MediaPlayerInterface*>(p.get ())-> setAudioSink (mAudioOutput); } return p; }

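The code above creates an AudioOutput, an abstraction of the audio playback hardware that is responsible for writing buffers to the hardware interface. It will be covered in more detail when we discuss the start method.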

MediaPlayerService::Client::createPlayer delegates to the MediaPlayerFactory factory class, which creates the Player matching the playerType passed in:

sp<MediaPlayerBase> MediaPlayerService::Client::createPlayer(player_type playerType ) { sp<MediaPlayerBase> p = mPlayer; if ((p != NULL) && (p->playerType() != playerType)) { p.clear() ; } if (p == NULL) { p = MediaPlayerFactory::createPlayer(playerType , this , notify , mPid ) ; } if (p != NULL) { p->setUID(mUID ) ; } return p; }
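createPlayer reuses the player the Client already holds when its type matches and only goes to the factory otherwise. A simplified sketch of that reuse logic follows; `Player`, `Client`, and `commit` are hypothetical names, with `commit` standing in for the assignment `mPlayer = p` that setDataSource_post performs on success.

```cpp
#include <cassert>
#include <memory>

enum PlayerType { STAGEFRIGHT_PLAYER, NU_PLAYER };

struct Player {
    explicit Player(PlayerType t) : type(t) {}
    PlayerType type;
};

class Client {
public:
    // Returns the cached player if its type matches, otherwise a fresh one.
    std::shared_ptr<Player> createPlayer(PlayerType wanted) {
        std::shared_ptr<Player> p = player_;
        if (p && p->type != wanted) {
            p.reset();  // wrong type: drop the local reference
        }
        if (!p) {
            p = std::make_shared<Player>(wanted);
        }
        return p;
    }

    // The new player is only stored once the data source was set successfully.
    void commit(const std::shared_ptr<Player>& p) { player_ = p; }

private:
    std::shared_ptr<Player> player_;
};
```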

For example, if we pass in STAGEFRIGHT_PLAYER, a StagefrightPlayer is created:

virtual sp<MediaPlayerBase> createPlayer(pid_t /* pid */) { return new StagefrightPlayer(); }

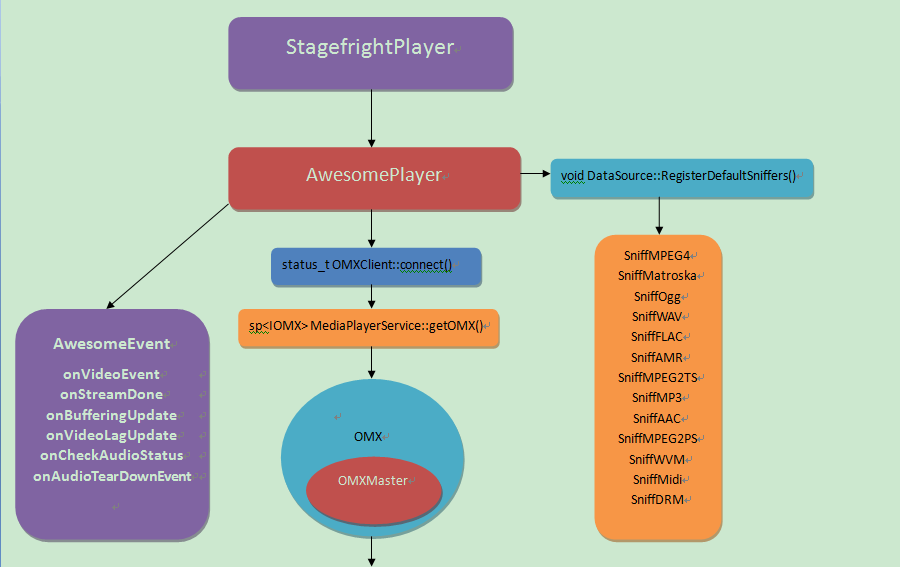

The StagefrightPlayer constructor in turn news an AwesomePlayer.

StagefrightPlayer::StagefrightPlayer() : mPlayer(new AwesomePlayer) { ALOGV("StagefrightPlayer" ) ; mPlayer->setListener(this ) ; }

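The diagram below was drawn to cover NuPlayer as well, because this part of the logic is the same for both players. If you are not interested in NuPlayer for now, you can look at just one side of it.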

The AwesomePlayer constructor registers the format sniffers through RegisterDefaultSniffers, creates a series of AwesomeEvent objects, and loads the codec plugins through mClient.connect().

AwesomePlayer::AwesomePlayer () : mQueueStarted (false ), mUIDValid (false ), mTimeSource (NULL ), mVideoRenderingStarted (false ), mVideoRendererIsPreview (false ), mMediaRenderingStartGeneration (0 ), mStartGeneration (0 ), mAudioPlayer (NULL ), mDisplayWidth (0 ), mDisplayHeight (0 ), mVideoScalingMode (NATIVE_WINDOW_SCALING_MODE_SCALE_TO_WINDOW), mFlags (0 ), mExtractorFlags (0 ), mVideoBuffer (NULL ), mDecryptHandle (NULL ), mLastVideoTimeUs (-1 ), mTextDriver (NULL ), mOffloadAudio (false ), mAudioTearDown (false ) { CHECK_EQ (mClient.connect (), (status_t )OK); DataSource::RegisterDefaultSniffers (); mVideoEvent = new AwesomeEvent (this , &AwesomePlayer::onVideoEvent); mVideoEventPending = false ; mStreamDoneEvent = new AwesomeEvent (this , &AwesomePlayer::onStreamDone); mStreamDoneEventPending = false ; mBufferingEvent = new AwesomeEvent (this , &AwesomePlayer::onBufferingUpdate); mBufferingEventPending = false ; mVideoLagEvent = new AwesomeEvent (this , &AwesomePlayer::onVideoLagUpdate); mVideoLagEventPending = false ; mCheckAudioStatusEvent = new AwesomeEvent ( this , &AwesomePlayer::onCheckAudioStatus); mAudioStatusEventPending = false ; mAudioTearDownEvent = new AwesomeEvent (this , &AwesomePlayer::onAudioTearDownEvent); mAudioTearDownEventPending = false ; mClockEstimator = new WindowedLinearFitEstimator (); mPlaybackSettings = AUDIO_PLAYBACK_RATE_DEFAULT; reset (); }

void DataSource::RegisterDefaultSniffers() { Mutex::Autolock autoLock(gSnifferMutex ) ; if (gSniffersRegistered) { return; } RegisterSniffer_l(SniffMPEG4) ; RegisterSniffer_l(SniffMatroska) ; RegisterSniffer_l(SniffOgg) ; RegisterSniffer_l(SniffWAV) ; RegisterSniffer_l(SniffFLAC) ; RegisterSniffer_l(SniffAMR) ; RegisterSniffer_l(SniffMPEG2TS) ; RegisterSniffer_l(SniffMP3) ; RegisterSniffer_l(SniffAAC) ; RegisterSniffer_l(SniffMPEG2PS) ; RegisterSniffer_l(SniffWVM) ; RegisterSniffer_l(SniffMidi) ; char value[PROPERTY_VALUE_MAX ] ; if (property_get("drm.service.enabled" , value , NULL) && (!strcmp(value, "1" ) || !strcasecmp(value, "true" ))) { RegisterSniffer_l(SniffDRM) ; } gSniffersRegistered = true ; }
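To make the role of the sniffers concrete, here is a small sketch of a sniffer registry. It assumes that the registered functions are later polled one by one and that the most confident answer wins; the `SnifferRegistry` class, the simplified `SnifferFunc` signature, and the two toy sniffers are illustrative only and much simpler than the real ones.

```cpp
#include <cassert>
#include <string>
#include <vector>

// A sniffer inspects the first bytes of the data and, on a match,
// reports a MIME type and a confidence value.
typedef bool (*SnifferFunc)(const std::string& head, std::string* mime, float* confidence);

class SnifferRegistry {
public:
    void registerSniffer(SnifferFunc f) {
        for (SnifferFunc existing : sniffers_) {
            if (existing == f) return;  // already registered
        }
        sniffers_.push_back(f);
    }

    // Runs every sniffer and keeps the answer with the highest confidence.
    bool sniff(const std::string& head, std::string* mime, float* confidence) const {
        *mime = "";
        *confidence = 0.0f;
        for (SnifferFunc f : sniffers_) {
            std::string m;
            float c = 0.0f;
            if (f(head, &m, &c) && c > *confidence) {
                *mime = m;
                *confidence = c;
            }
        }
        return *confidence > 0.0f;
    }

private:
    std::vector<SnifferFunc> sniffers_;
};

// Toy sniffers that only look at a magic prefix.
bool sniffOgg(const std::string& head, std::string* mime, float* confidence) {
    if (head.compare(0, 4, "OggS") != 0) return false;
    *mime = "application/ogg";
    *confidence = 0.2f;
    return true;
}

bool sniffWav(const std::string& head, std::string* mime, float* confidence) {
    if (head.compare(0, 4, "RIFF") != 0) return false;
    *mime = "audio/x-wav";
    *confidence = 0.3f;
    return true;
}
```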

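Next, let's look at OMXClient::connect. It obtains the OMX object through getOMX on MediaPlayerService, which news the OMX object on first use. The OMX object has a member named mMaster (an OMXMaster); when mMaster is constructed, addVendorPlugin and addPlugin are called to load the hardware (vendor) and software codec plugins.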

status_t OMXClient::connect() { sp<IServiceManager> sm = defaultServiceManager(); sp<IBinder> binder = sm->getService(String16("media.player")); sp<IMediaPlayerService> service = interface_cast<IMediaPlayerService>(binder); mOMX = service->getOMX(); if (!mOMX->livesLocally(0 /* node */, getpid())) { ALOGI("Using client-side OMX mux."); mOMX = new MuxOMX(mOMX); } return OK; }

sp<IOMX> MediaPlayerService::getOMX () { Mutex::Autolock autoLock (mLock) ; if (mOMX.get () == NULL ) { mOMX = new OMX; } return mOMX; }

OMX::OMX () : mMaster (new OMXMaster), mNodeCounter (0 ) { }

OMXMaster::OMXMaster() : mVendorLibHandle(NULL) { addVendorPlugin(); addPlugin(new SoftOMXPlugin); }

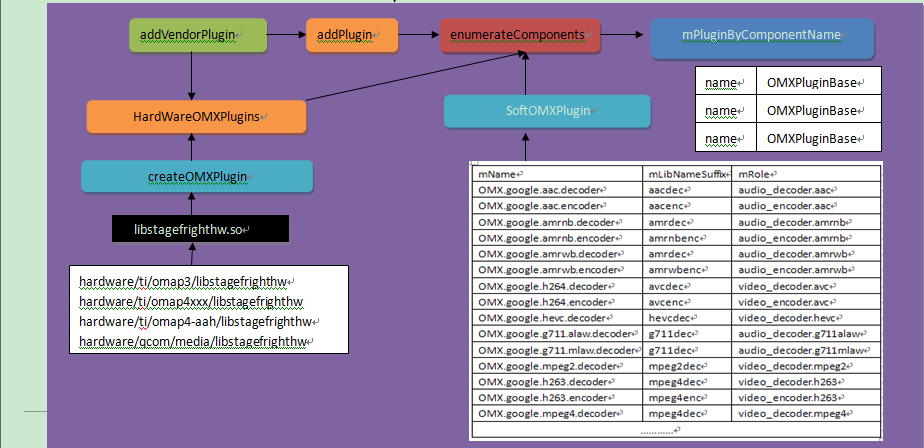

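Let's look at how the VendorPlugin is created. It first calls dlopen to open the libstagefrighthw.so shared library, then looks up and calls the createOMXPlugin function inside it to create an object of type OMXPluginBase, and finally registers that object through OMXMaster::addPlugin(OMXPluginBase *plugin), which fills mPluginByComponentName.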

void OMXMaster::addVendorPlugin() { addPlugin("libstagefrighthw.so"); }

void OMXMaster::addPlugin(const char * libname ) { mVendorLibHandle = dlopen(libname, RTLD_NOW); typedef OMXPluginBase *(*CreateOMXPluginFunc)() ; CreateOMXPluginFunc createOMXPlugin = (CreateOMXPluginFunc)dlsym(mVendorLibHandle, "createOMXPlugin" ); if (!createOMXPlugin) createOMXPlugin = (CreateOMXPluginFunc)dlsym( mVendorLibHandle, "_ZN7android15createOMXPluginEv" ); if (createOMXPlugin) { addPlugin((* createOMXPlugin ) () ); } }

OMXMaster::addPlugin(OMXPluginBase *plugin) calls enumerateComponents to list every component provided by the VendorPlugin or the SoftPlugin and adds each one to mPluginByComponentName:

void OMXMaster::addPlugin(OMXPluginBase *plugin) { Mutex::Autolock autoLock(mLock); mPlugins.push_back(plugin); OMX_U32 index = 0 ; char name [128 ]; OMX_ERRORTYPE err; while ((err = plugin->enumerateComponents( name , sizeof(name ), index ++)) == OMX_ErrorNone) { String8 name8(name ); if (mPluginByComponentName.indexOfKey(name8) >= 0 ) { ALOGE("A component of name '%s' already exists, ignoring this one.", name8.string()); continue ; } mPluginByComponentName.add (name8, plugin); } }
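The effect of this loop is an index from component name to the plugin that provides it, where the first plugin to claim a name keeps it. A compact sketch of that index follows, using std::map in place of KeyedVector; the `Plugin` struct, `PluginRegistry` class, and `find` helper are hypothetical names chosen for illustration.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

struct Plugin {
    std::string label;
    std::vector<std::string> components;  // names this plugin can instantiate
};

class PluginRegistry {
public:
    // Indexes every component of the plugin by name; the first registration wins.
    void addPlugin(const Plugin* plugin) {
        assert(plugin != nullptr);
        for (const std::string& name : plugin->components) {
            if (byComponentName_.count(name) != 0) {
                continue;  // a component of this name already exists, ignore this one
            }
            byComponentName_[name] = plugin;
        }
    }

    // Returns the plugin that owns the component, or nullptr if it is unknown.
    const Plugin* find(const std::string& name) const {
        auto it = byComponentName_.find(name);
        return it == byComponentName_.end() ? nullptr : it->second;
    }

private:
    std::map<std::string, const Plugin*> byComponentName_;
};
```

Since the vendor plugin is added before the software plugin, a hardware component would take precedence over a software component with the same name under this scheme.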

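So far, on the Client side, we have found the player type that matches the data source, invoked the factory class for that type, and created the player. Taking an MP3 file as the example, the player created is a StagefrightPlayer. While it was being constructed, an AwesomePlayer with its AwesomeEvent objects was created, the format sniffers were registered, and the component names of the VendorPlugin and SoftPlugin were loaded.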

Loading the data source

Once the playback engine has been loaded, the next step is to give it a data source.

status_t StagefrightPlayer::setDataSource(const sp<IMediaHTTPService> &httpService, const char *url, const KeyedVector<String8, String8> *headers) { return mPlayer->setDataSource(httpService, url, headers); }

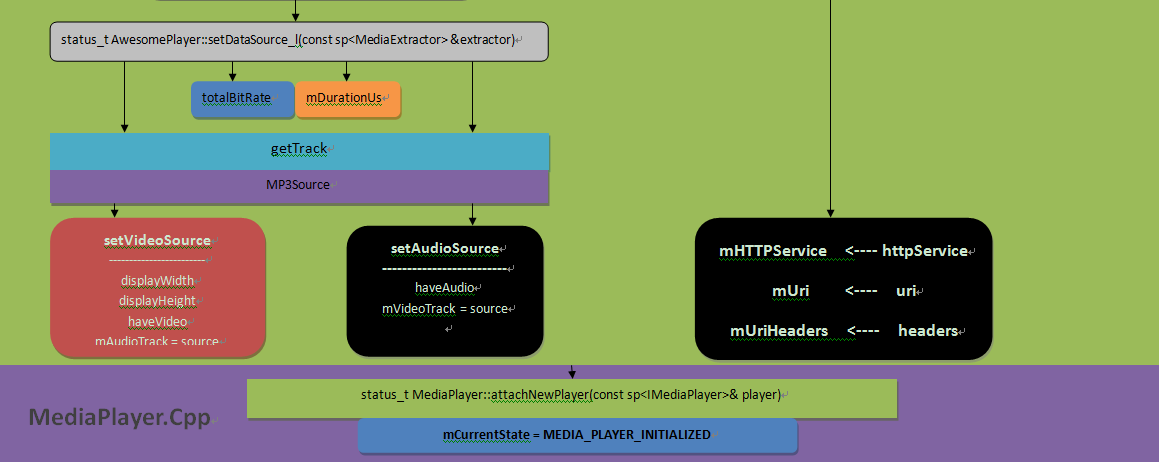

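Below is setDataSource in the AwesomePlayer class. After several layers of calls it news a FileSource object and assigns it to mFileSource, then uses MediaExtractor::Create to create an extractor that pulls parameters such as the bit rate out of the FileSource.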

status_t AwesomePlayer::setDataSource(const sp<IMediaHTTPService> &httpService, const char *uri, const KeyedVector<String8, String8> *headers) { Mutex::Autolock autoLock(mLock); return setDataSource_l(httpService, uri, headers); }

status_t AwesomePlayer::setDataSource(int fd, int64_t offset, int64_t length) { Mutex::Autolock autoLock(mLock); reset_l(); sp<DataSource> dataSource = new FileSource(fd, offset, length); status_t err = dataSource->initCheck(); mFileSource = dataSource; { Mutex::Autolock autoLock(mStatsLock); mStats.mFd = fd; mStats.mURI = String8(); } return setDataSource_l(dataSource); }

status_t AwesomePlayer::setDataSource_l(const sp<DataSource> &dataSource) { sp<MediaExtractor> extractor = MediaExtractor::Create(dataSource); if (extractor->getDrmFlag()) { checkDrmStatus(dataSource); } return setDataSource_l(extractor); }

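To create a MediaExtractor, the sniff functions are called to determine the type of the current data source, and the matching MediaExtractor is then created from the resulting MIME type. For example, if the data source is an MP3 file, an MP3Extractor is returned.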

sp<MediaExtractor> MediaExtractor ::Create ( const sp<DataSource> &source, const char *mime) { sp<AMessage> meta; String8 tmp; if (mime == NULL ) { float confidence; if (!source->sniff (&tmp, &confidence, &meta)) { ALOGV ("FAILED to autodetect media content." ); return NULL ; } mime = tmp.string (); ALOGV ("Autodetected media content as '%s' with confidence %.2f" , mime, confidence); } bool isDrm = false ; if (!strncmp (mime, "drm+" , 4 )) { const char *originalMime = strchr (mime+4 , '+' ); if (originalMime == NULL ) { return NULL ; } ++originalMime; if (!strncmp (mime, "drm+es_based+" , 13 )) { return new DRMExtractor (source, originalMime); } else if (!strncmp (mime, "drm+container_based+" , 20 )) { mime = originalMime; isDrm = true ; } else { return NULL ; } } MediaExtractor *ret = NULL ; if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_MPEG4) || !strcasecmp (mime, "audio/mp4" )) { ret = new MPEG4Extractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_MPEG)) { ret = new MP3Extractor (source, meta); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_AMR_NB) || !strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_AMR_WB)) { ret = new AMRExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_FLAC)) { ret = new FLACExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_WAV)) { ret = new WAVExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_OGG)) { ret = new OggExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_MATROSKA)) { ret = new MatroskaExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_MPEG2TS)) { ret = new MPEG2TSExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_WVM)) { return new WVMExtractor (source); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_AAC_ADTS)) { ret = new AACExtractor (source, meta); } else if (!strcasecmp (mime, MEDIA_MIMETYPE_CONTAINER_MPEG2PS)) { ret = new MPEG2PSExtractor (source); } else if 
(!strcasecmp (mime, MEDIA_MIMETYPE_AUDIO_MIDI)) { ret = new MidiExtractor (source); } if (ret != NULL ) { if (isDrm) { ret->setDrmFlag (true ); } else { ret->setDrmFlag (false ); } } return ret; }
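The DRM branch at the start of Create is the least obvious part: a sniffed MIME type of the form `drm+<kind>+<original mime>` is split into its kind and the original MIME type before the extractor is chosen. The sketch below isolates that parsing step. `parseMime`, `ParsedMime`, and the `DrmKind` enum are hypothetical names, and the function only classifies the string rather than constructing an extractor.

```cpp
#include <cassert>
#include <string>

enum DrmKind { NOT_DRM, DRM_ES_BASED, DRM_CONTAINER_BASED, DRM_INVALID };

struct ParsedMime {
    DrmKind kind;
    std::string mime;  // the original MIME type with any drm prefix removed
};

ParsedMime parseMime(const std::string& mime) {
    if (mime.compare(0, 4, "drm+") != 0) {
        return {NOT_DRM, mime};
    }
    // Find the '+' that separates the DRM kind from the original MIME type.
    const std::string::size_type plus = mime.find('+', 4);
    if (plus == std::string::npos) {
        return {DRM_INVALID, ""};
    }
    const std::string original = mime.substr(plus + 1);
    if (mime.compare(0, 13, "drm+es_based+") == 0) {
        return {DRM_ES_BASED, original};         // handled by DRMExtractor
    }
    if (mime.compare(0, 20, "drm+container_based+") == 0) {
        return {DRM_CONTAINER_BASED, original};  // normal extractor with the DRM flag set
    }
    return {DRM_INVALID, ""};
}
```

For a container-based DRM file, the original MIME type then goes through the same if/else chain as an unprotected file, and the DRM flag is set on the resulting extractor.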

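With the MP3Extractor in hand, we can extract the key information from the media file. The most important call here is extractor->getTrack(i), which returns the track carrying the actual audio or video content. That track is handed to the playback engine through setAudioSource and setVideoSource, and the engine later uses it as the decoder's input.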

status_t AwesomePlayer::setDataSource_l(const sp<MediaExtractor> &extractor) { int64_t totalBitRate = 0 ; mExtractor = extractor; for (size_t i = 0 ; i < extractor->countTracks(); ++i) { sp<MetaData> meta = extractor->getTrackMetaData(i); int32_t bitrate; if (!meta->findInt32(kKeyBitRate, &bitrate)) { const char *mime; CHECK(meta->findCString(kKeyMIMEType, &mime)); totalBitRate = -1 ; break ; } totalBitRate += bitrate; } sp<MetaData> fileMeta = mExtractor->getMetaData(); if (fileMeta != NULL) { int64_t duration; if (fileMeta->findInt64(kKeyDuration, &duration)) { mDurationUs = duration; } } mBitrate = totalBitRate; ALOGV("mBitrate = %lld bits/sec" , (long long )mBitrate); { Mutex::Autolock autoLock(mStatsLock); mStats.mBitrate = mBitrate; mStats.mTracks.clear(); mStats.mAudioTrackIndex = -1 ; mStats.mVideoTrackIndex = -1 ; } bool haveAudio = false ; bool haveVideo = false ; for (size_t i = 0 ; i < extractor->countTracks(); ++i) { sp<MetaData> meta = extractor->getTrackMetaData(i); const char *_mime; CHECK(meta->findCString(kKeyMIMEType, &_mime)); String8 mime = String8(_mime); if (!haveVideo && !strncasecmp(mime.string (), "video/" , 6 )) { setVideoSource(extractor->getTrack(i)); haveVideo = true ; int32_t displayWidth, displayHeight; bool success = meta->findInt32(kKeyDisplayWidth, &displayWidth); if (success) { success = meta->findInt32(kKeyDisplayHeight, &displayHeight); } if (success) { mDisplayWidth = displayWidth; mDisplayHeight = displayHeight; } { Mutex::Autolock autoLock(mStatsLock); mStats.mVideoTrackIndex = mStats.mTracks.size(); mStats.mTracks.push(); TrackStat *stat = &mStats.mTracks.editItemAt(mStats.mVideoTrackIndex); stat->mMIME = mime.string (); } } else if (!haveAudio && !strncasecmp(mime.string (), "audio/" , 6 )) { setAudioSource(extractor->getTrack(i)); haveAudio = true ; mActiveAudioTrackIndex = i; { Mutex::Autolock autoLock(mStatsLock); mStats.mAudioTrackIndex = mStats.mTracks.size(); mStats.mTracks.push(); TrackStat *stat = 
&mStats.mTracks.editItemAt(mStats.mAudioTrackIndex); stat->mMIME = mime.string (); } if (!strcasecmp(mime.string (), MEDIA_MIMETYPE_AUDIO_VORBIS)) { sp<MetaData> fileMeta = extractor->getMetaData(); int32_t loop ; if (fileMeta != NULL && fileMeta->findInt32(kKeyAutoLoop, &loop ) && loop != 0 ) { modifyFlags(AUTO_LOOPING, SET); } } } else if (!strcasecmp(mime.string (), MEDIA_MIMETYPE_TEXT_3GPP)) { addTextSource_l(i, extractor->getTrack(i)); } } if (!haveAudio && !haveVideo) { if (mWVMExtractor != NULL) { return mWVMExtractor->getError(); } else { return UNKNOWN_ERROR; } } mExtractorFlags = extractor->flags(); return OK; }
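Stripped of statistics and display handling, this function does two things: it sums the track bit rates (giving up with -1 if any track has none) and it picks the first video track and the first audio track. A minimal sketch of just that logic is shown below. `TrackMeta`, `Selection`, and `selectTracks` are hypothetical names, a negative bitrate is used here to model "key not present", and the prefix match is case-sensitive whereas the original uses strncasecmp.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct TrackMeta {
    std::string mime;
    int32_t bitrate;  // negative models a track without a bit rate entry
};

struct Selection {
    int64_t totalBitrate;  // -1 if any track lacks a bit rate
    int videoIndex;        // -1 if there is no video track
    int audioIndex;        // -1 if there is no audio track
};

Selection selectTracks(const std::vector<TrackMeta>& tracks) {
    Selection s = {0, -1, -1};

    // First pass: the total is only meaningful if every track reports a bit rate.
    for (const TrackMeta& t : tracks) {
        if (t.bitrate < 0) {
            s.totalBitrate = -1;
            break;
        }
        s.totalBitrate += t.bitrate;
    }

    // Second pass: keep the first video track and the first audio track.
    for (std::size_t i = 0; i < tracks.size(); ++i) {
        const std::string& mime = tracks[i].mime;
        if (s.videoIndex < 0 && mime.compare(0, 6, "video/") == 0) {
            s.videoIndex = static_cast<int>(i);
        } else if (s.audioIndex < 0 && mime.compare(0, 6, "audio/") == 0) {
            s.audioIndex = static_cast<int>(i);
        }
    }
    return s;
}
```

In the original, a result with neither an audio nor a video track makes setDataSource_l return an error.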

void AwesomePlayer::setAudioSource(sp<MediaSource> source ) { CHECK(source != NULL ); mAudioTrack = source ; }

void MediaPlayerService::Client ::setDataSource_post ( const sp<MediaPlayerBase>& p, status_t status) { ALOGV (" setDataSource" ); mStatus = status; if (mStatus != OK) { ALOGE (" error: %d" , mStatus); return ; } if (mRetransmitEndpointValid) { mStatus = p->setRetransmitEndpoint (&mRetransmitEndpoint); if (mStatus != NO_ERROR) { ALOGE ("setRetransmitEndpoint error: %d" , mStatus); } } if (mStatus == OK) { mPlayer = p; } }

Finally, here is a structural diagram of the whole setDataSource process: